Arthur C. Clarke’s famous quote states:

“Any sufficiently advanced technology is indistinguishable from magic.”

It always bothered me.

The way this quote is commonly used is somewhat patronizing, because it usually implies that someone else might mistake a new technology for magic. But not us. The experience of true astonishment is never first-person, no matter how much admiration we might have.

Have you ever used an app or a device and screamed: “What sort of sorcery is this?”

Well, neither have I.

But then OpenAI released the DALL·E 2 text-to-image generator for testing. And now I’m very confused.

Basically, all you have to do is describe what you want to see in natural language. And the AI model will use its neural networks to generate some images. Like a magic mirror.

If you could see anything in the world, anything at all, what would it be?

Like any sane person, I knew immediately what my wish was and didn’t have to think twice. Because deep down, we all want to see it.

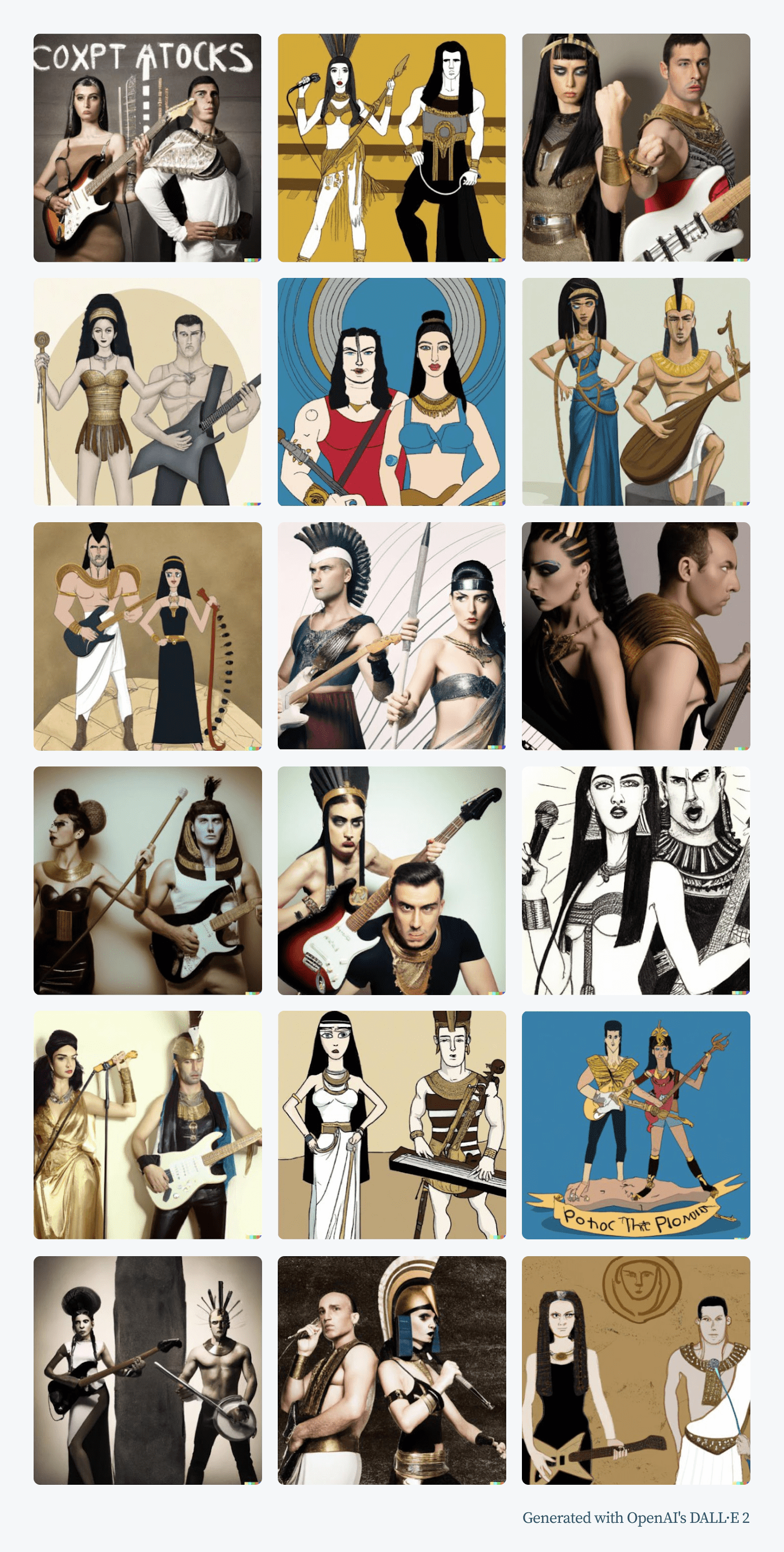

My prompt was: Cleopatra and Mark Antony start a rock band.

Here’s what DALL·E 2’s artificial intelligence came up with:

Mindblowing!

This thing is uncanny. And highly addictive.

Sure, some of the images generated by the AI are rough around the edges. And there is a bit of dream logic at work here too: you may have noticed that the text rendered inside the images is pure gibberish. But with a little fine-tuning, the images produced by OpenAI’s DALL·E 2 could easily end up on the cover of Mark and Cleo’s debut album.

Now, this raises some important questions—

If I was the one who came up with the idea, does that make me the author? Will writing prompts become some sort of new art form? Is that how people will create art, images, and movies in the future? And, if so, does it mean that we’ll all become artists? Or will artificial intelligence become some sort of ultimate creative force, with people becoming mere curators of what it creates?

Personally, I can’t wait till this technology becomes more mature and publicly available. So far, navigating inside AI dreams has been a very fun experience. But what do others think?

Text-to-image generators: opportunities and dangers

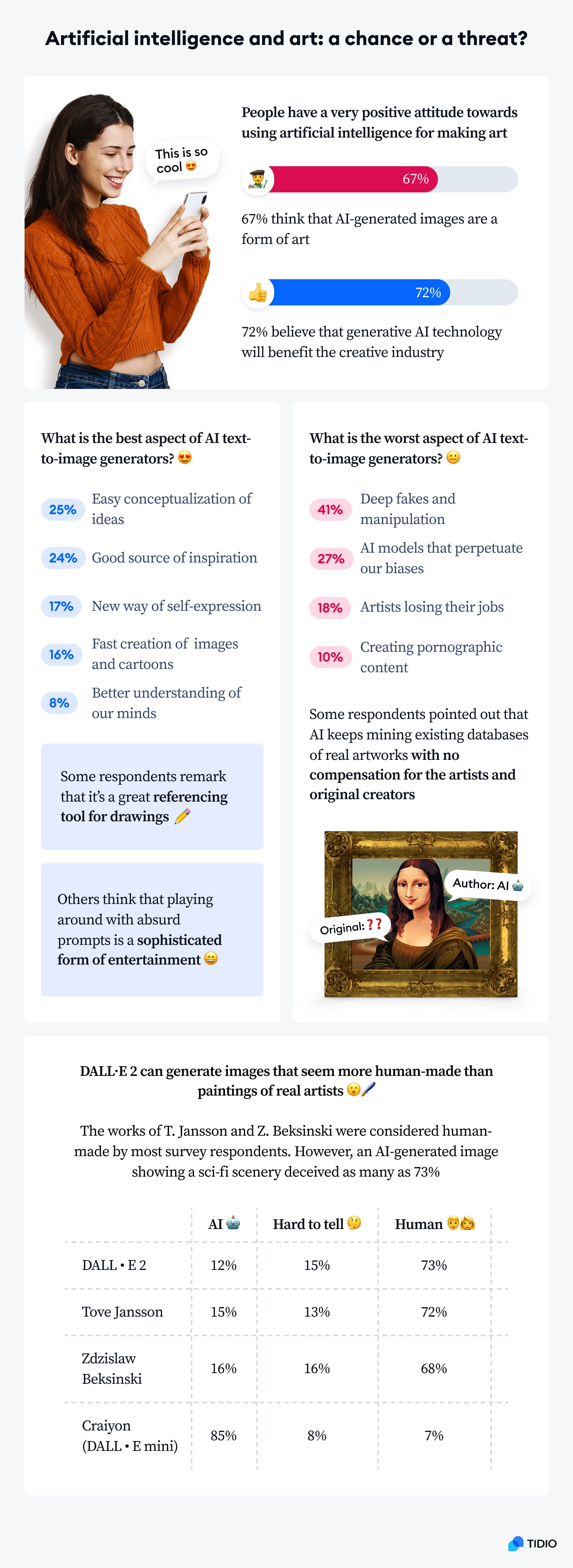

We conducted a survey and asked internet users, artists, and AI enthusiasts for their opinion on the relationship between AI and art.

We also showed them a selection of images and asked whether they could tell if a painting or a photo was AI-generated.

Some of the images created with OpenAI’s tool were judged to be human-made more often than real paintings (!)

It turned out that 68% of the people surveyed judged a surrealist painting from the 80s to be painted by a human. At the same time, 73% of them judged a painting generated with DALL·E 2 to be a human-made artwork.

And here’s what else we found out.

- Almost 67% believe that AI-generated images are a form of art

- Only 9% of respondents said it was easy to decide if images were AI-generated or created by artists (spoiler: most of them still made mistakes)

- According to our survey respondents, the biggest pros of generative AI technology are easy conceptualization of ideas and helping to find inspiration

- Many respondents are worried about the misuse of AI to create deep fakes

- Some respondents are concerned about training AI models on data that will perpetuate our biases

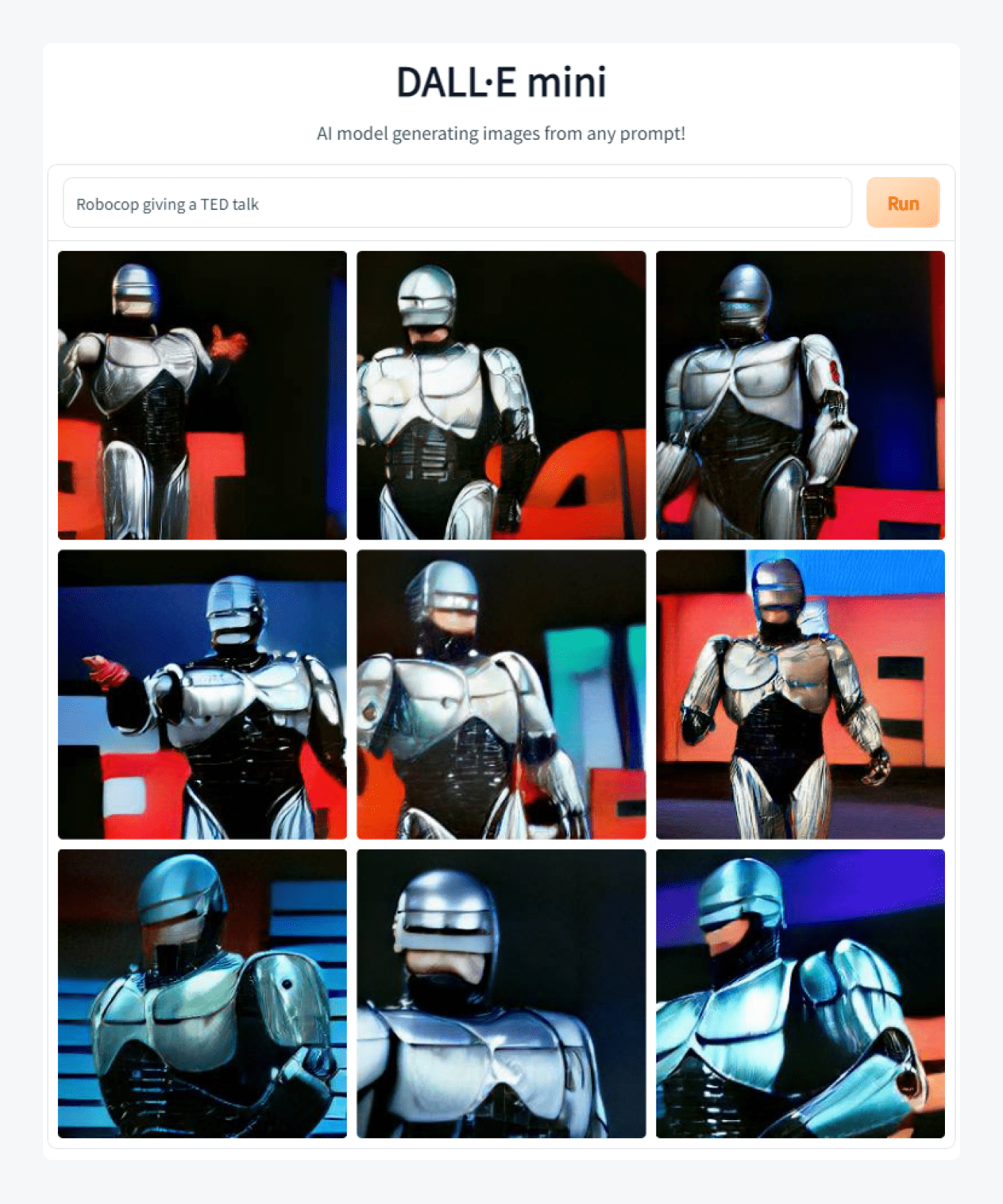

It’s very interesting that more than half of the respondents got tricked and took the image created by AI for a real painting. However, with some examples, the task was easier. Images created with the now popular Craiyon app (previously known as DALL·E mini, although not endorsed or connected with OpenAI’s project) didn’t fool anyone and the vast majority of respondents recognized them as AI.

It’s easy to see why.

Whether a particular image generated by artificial intelligence looks convincing to us depends on many factors. These include the complexity of the scene and how ambiguous the prompts are, as well as the type of AI model chosen to generate the image.

AI text-to-image technology can potentially be used for:

- Creating illustrations and concept art

- Generating cartoon character designs

- Making animation and comic book model sheets

- Producing realistic matte paintings and backgrounds

- Designing logos and book covers

- Generating stock and product photography

- Brainstorming ideas for any works related to visual arts

- Preparing visualizations of sculptures, architecture, and interior designs

So, are professional artists going to be out of work?

Not necessarily.

However, people doing the tasks mentioned above as part of their work should keep an eye on AI text-to-image tools. Some of them may soon be able to use AI to make their work easier and faster.

But it also means that there will soon be a new generation of artists and designers who have a radically different approach to the creative process. Designing and sketching things manually and rendering images may become less relevant than they are now. On the other hand, being able to describe to generative AI models what you want to see could become a high-demand skill on the job market.

This technology is so powerful that it can transform the whole creative industry as we know it. But it also makes the issue of authorship much more complicated. After all, AI models use existing works for training!

So—

Will a phrase like “this image is subject to copyright laws and may not be used for training AI models” become a new standard?

Things could also take the opposite turn: creating high-quality drawings and paintings solely for the purpose of training AI models in different “styles” could become a lucrative new industry. Users would then use AI to create images and pay licensing fees to artists for borrowing their style.


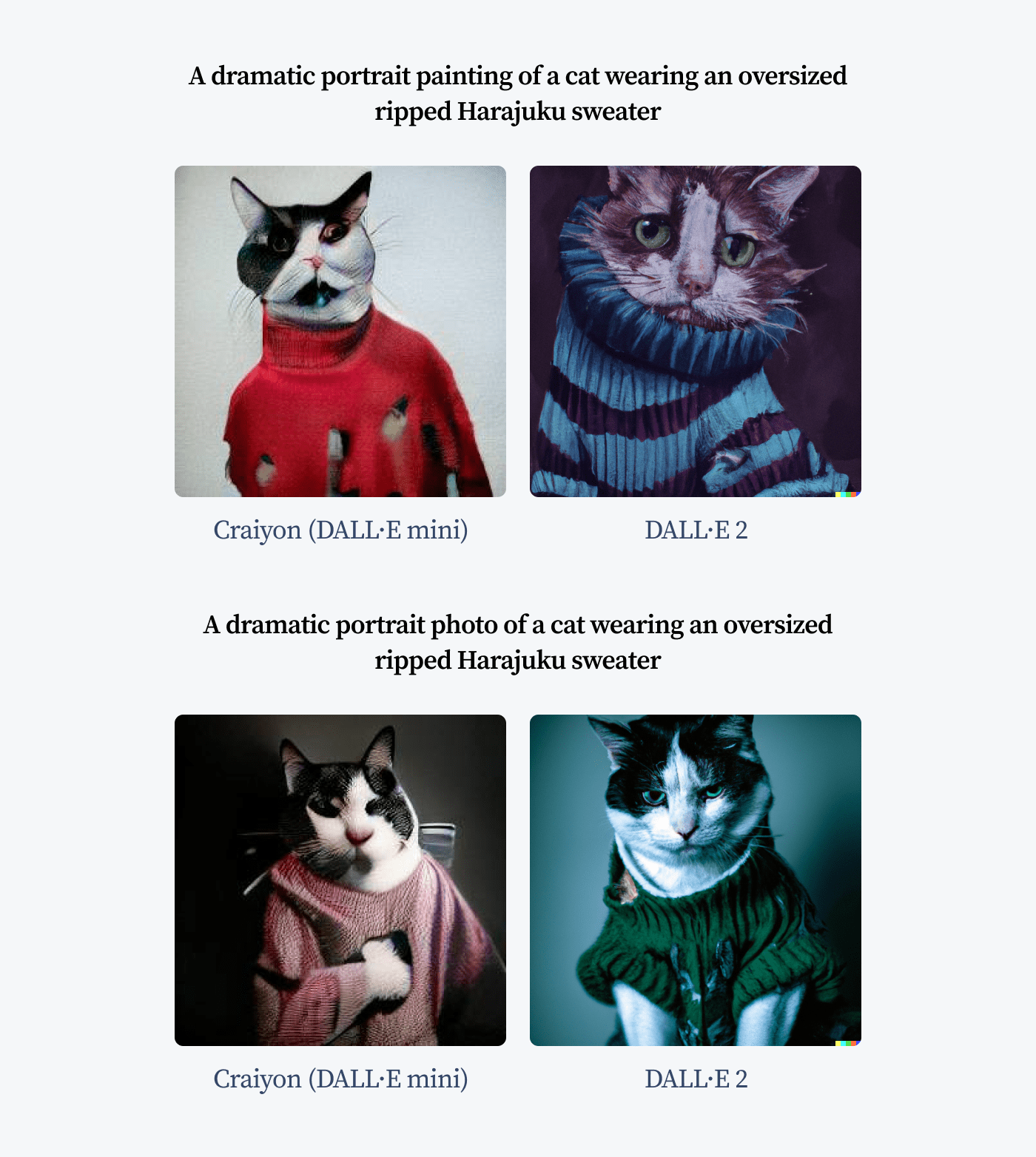

Let’s go through some more examples of AI text-to-image photos and illustrations to get the feel of what exactly we’re dealing with.

Examples of images generated with DALL·E 2 and other generative AI

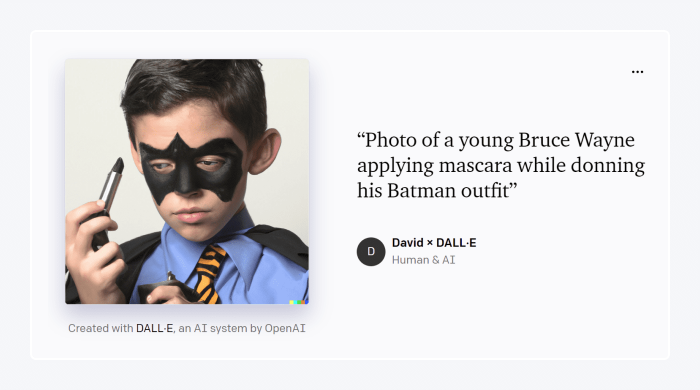

From the user’s perspective, creating images with text-to-image generators is extremely easy. You simply type in your prompt—a description of what you want to appear in the picture—and hit the button. The AI generator takes care of the rest, creating an image or a set of images that render the prompt.

Some of the popular AI art generators use Discord servers where you can send your prompt as a regular message. You just use a command in a special channel and, after a while, a Discord bot generates the image and posts it.

Here are some interesting AI-generated images.

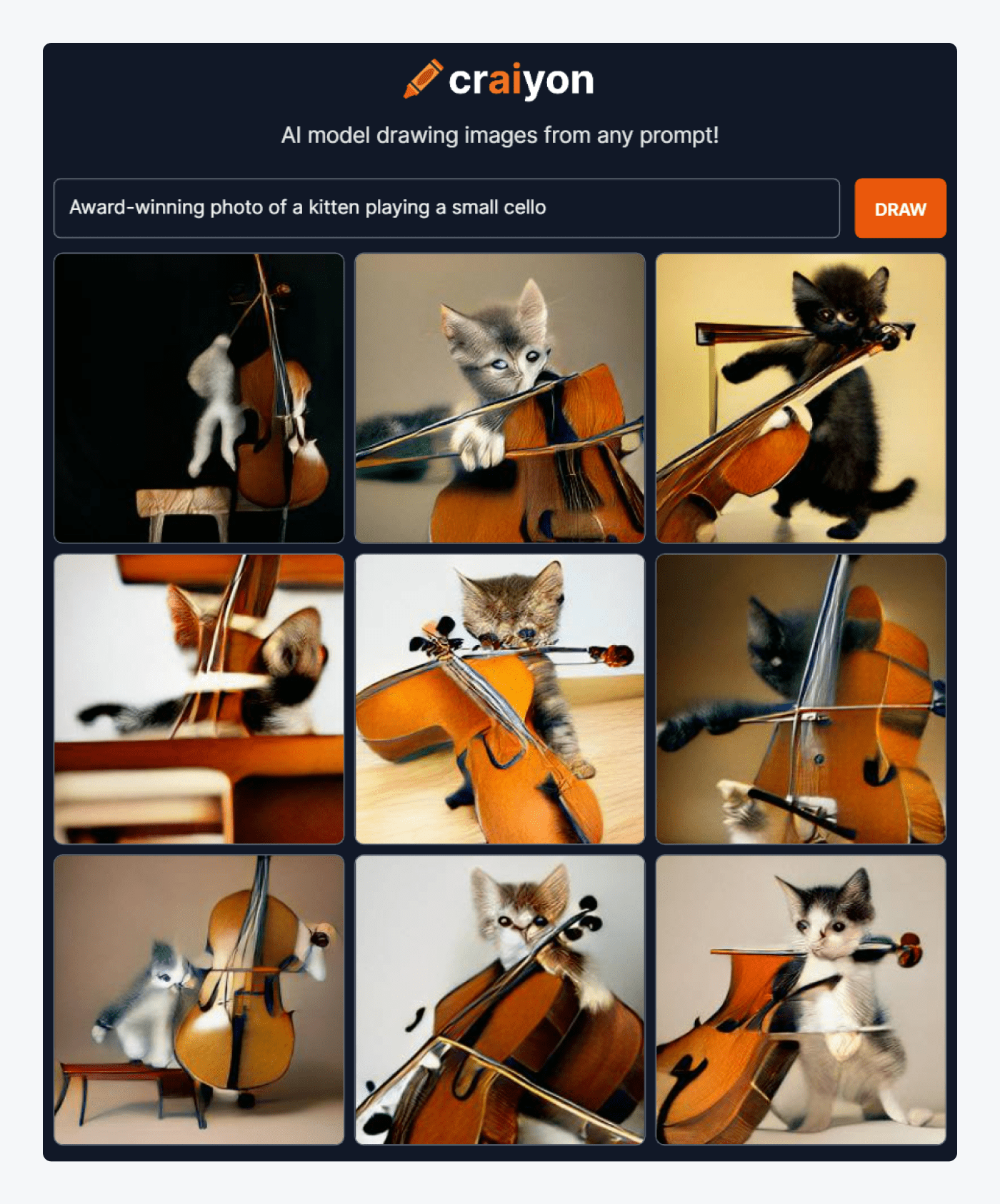

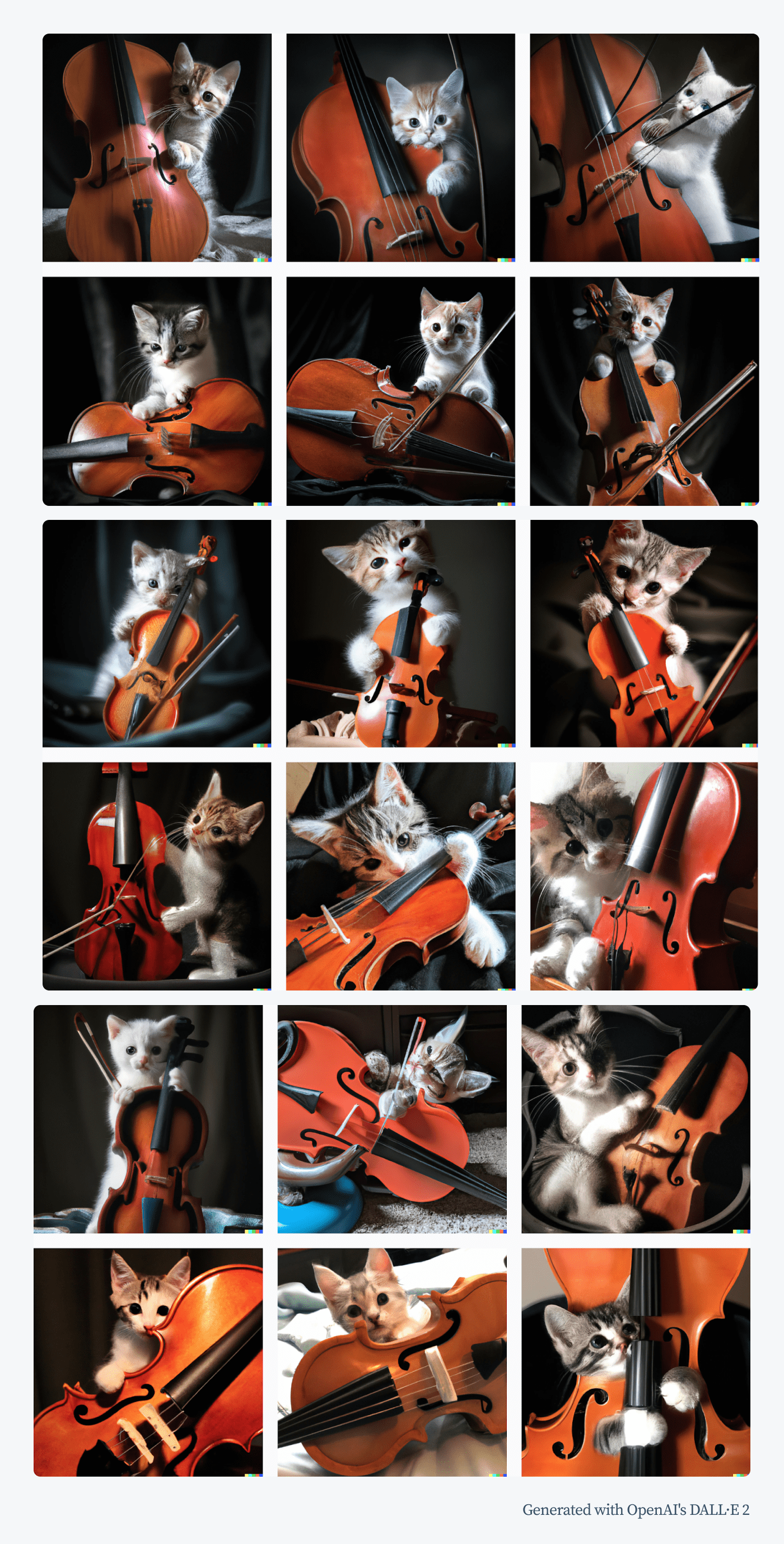

1. Cats playing violins and tiny cellos

Testing whether artificial intelligence would be able to create a cat was one of the main AI tests of an experiment we conducted some time ago. The technology we used previously did not do a very good job of creating a cat in a natural pose.

Before we knew it, however, new tools appeared that can handle not only typical depictions but also more complex scenes.

Cats are not in the habit of playing musical instruments (and if they do, they usually don’t do it very well). Such an unusual scene should be challenging for generative AI models because there probably weren’t too many reference photos of cats playing cellos in the training datasets.

Let’s see what a cat playing the cello looks like according to the Craiyon app.

Award-winning they are most certainly not. To be fair though, the cats do seem to play their instruments.

Now, OpenAI’s DALL·E 2 tackled the task much better.

Whether cats actually play these instruments is still debatable. But certainly, the images created are of much better quality. That said, the AI still has some difficulty rendering the correct form of the instruments.

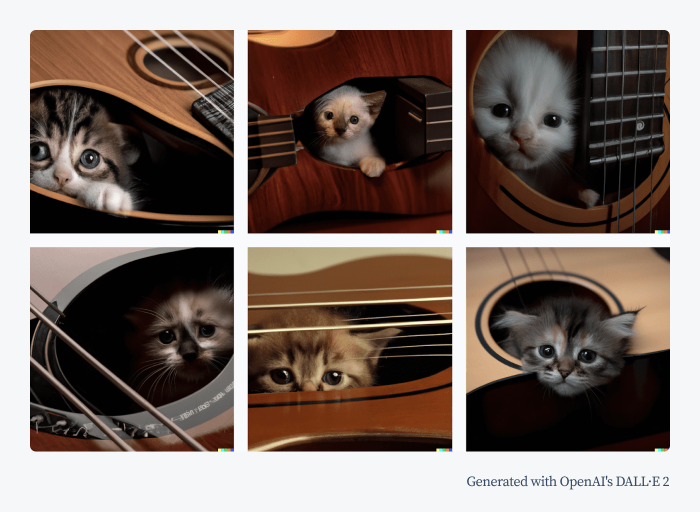

These problems become even more apparent when you try to put a cat inside the instrument. Since the violin is too small to house a cat, let’s change the instrument to a guitar.

Before the animal protection organizations intervene—no cats were harmed while making these AI experiments.

Let’s try some dogs now.

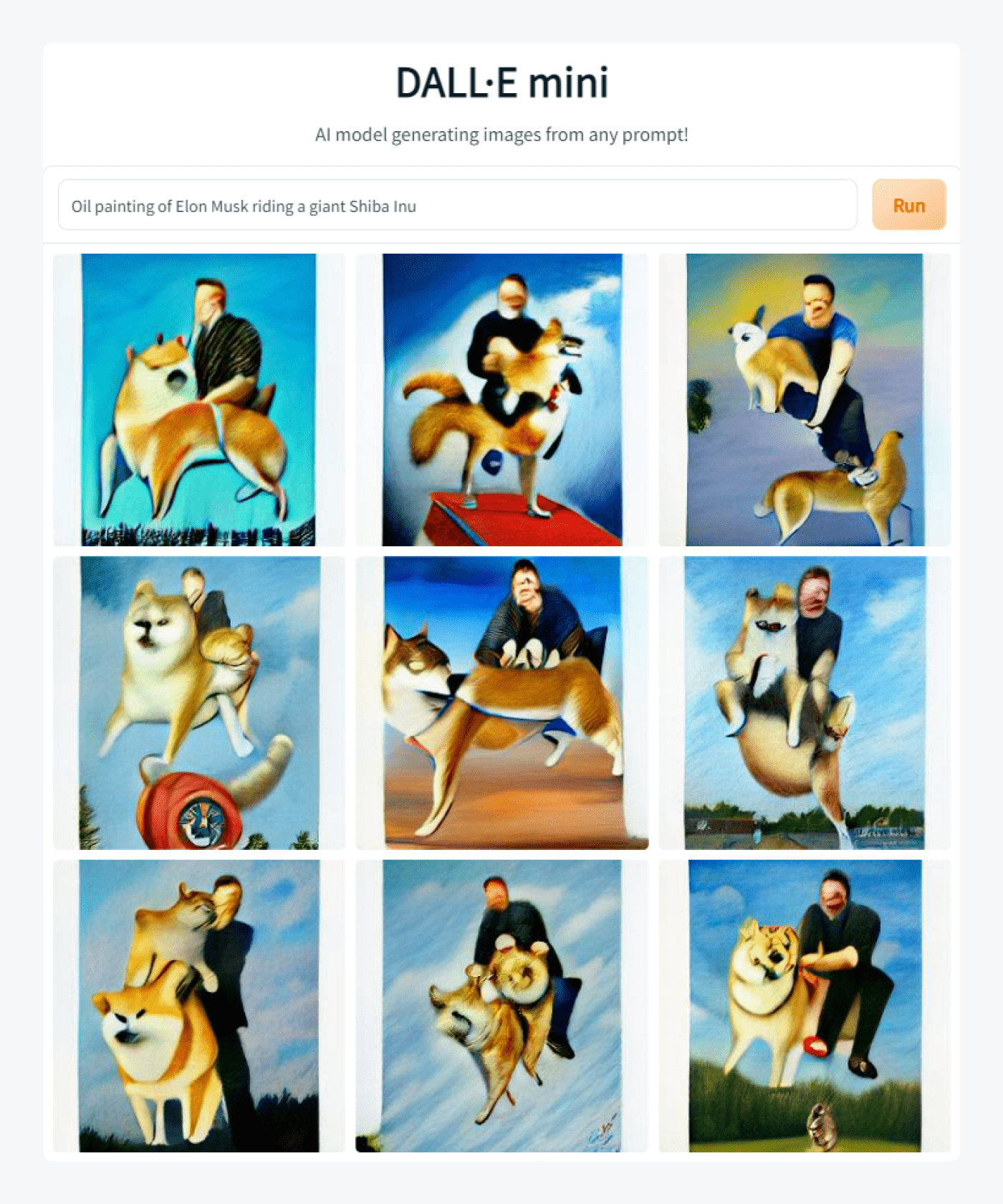

2. Elon Musk and puppies

Most publicly available text-to-image generators are not very good at interpreting complex prompts. Suppose we wanted to create a caricature for an article about Elon Musk and cryptocurrencies. If we want to generate a picture of Elon riding a Shiba Inu dog, then Craiyon will not suffice.

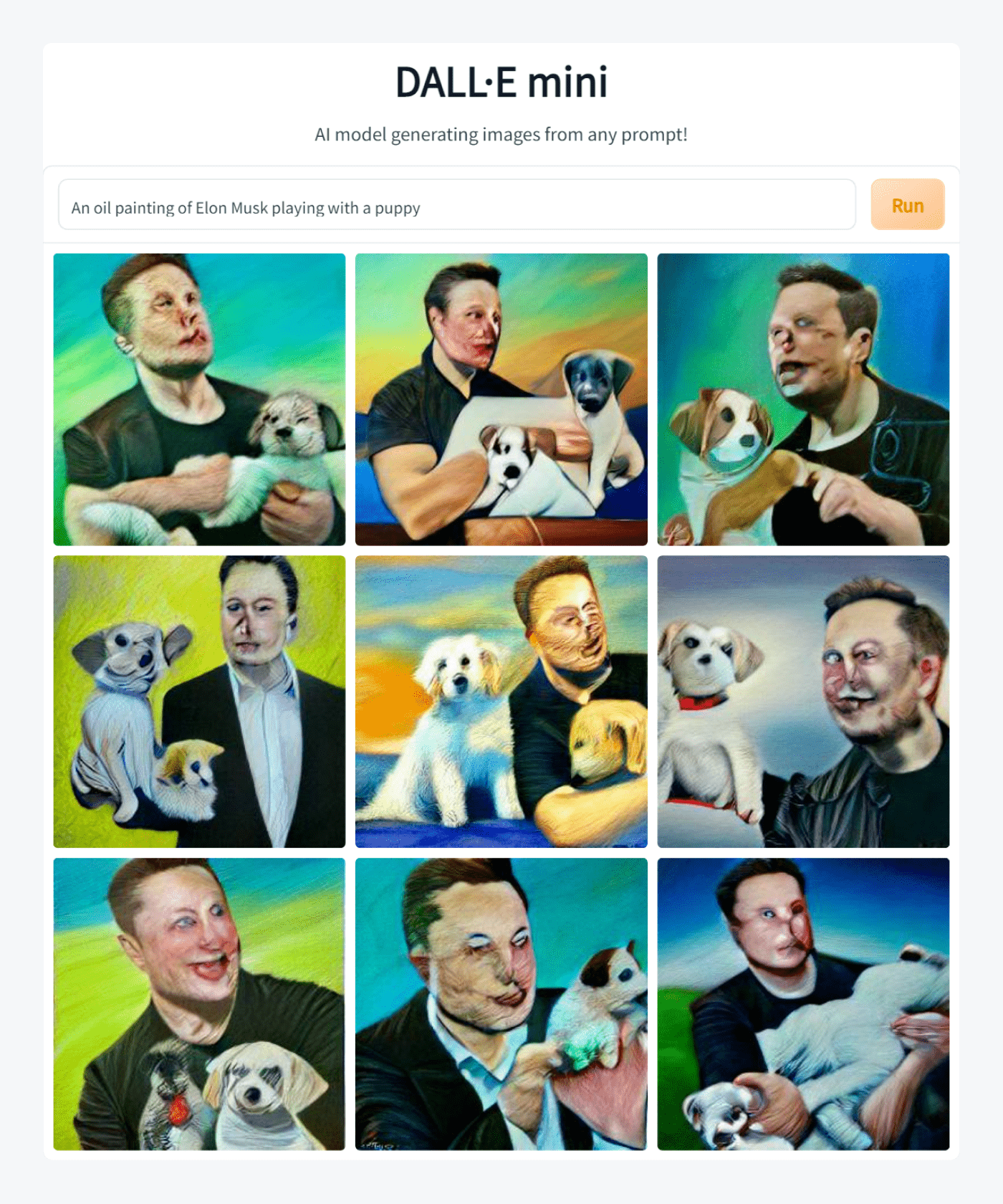

It sort of works. But the resolution is too low to actually tell if it is Elon Musk. Let’s try a different prompt.

While the rendering is not the best, we actually get the likeness of Elon Musk conveyed quite well.

Now, let’s try using DALL·E 2 with a neo-classical oil painting of Elon Musk dressed as Napoleon riding a big Shiba Inu dog through a battlefield.

I know what you are thinking—this guy doesn’t look like Elon.

And you are absolutely right.

If there’s one thing this AI doesn’t do, it’s render likenesses of famous people. But that is by design. The algorithm modifies facial features so that users won’t generate deep fake images of real people. Celebrities are a no-no.

Homer Simpson will look like Homer Simpson, but Boris Johnson won’t really look like himself. That’s why DALL·E mini can be much better for creating memes like “Gordon Ramsay throwing a steak into the Large Hadron Collider.”

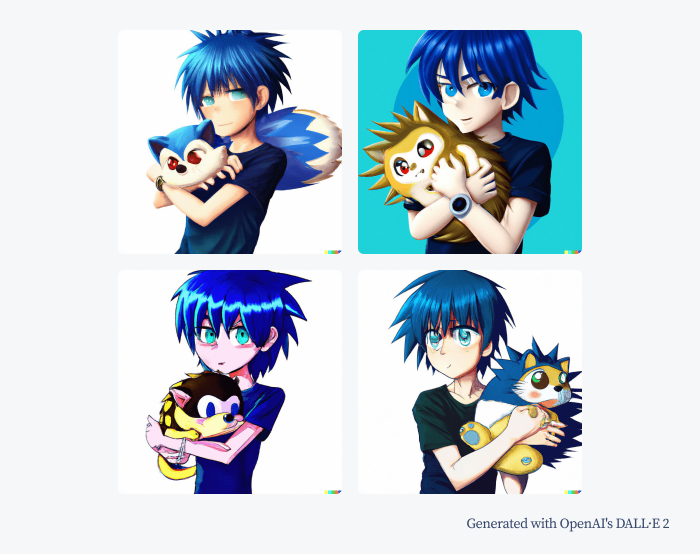

3. Anime cartoons and game characters

It’s obvious that some applications are much better at interpreting prompts than others. Generating an anime boy holding Sonic the Hedgehog like a cat confused the majority of AI text-to-image engines.

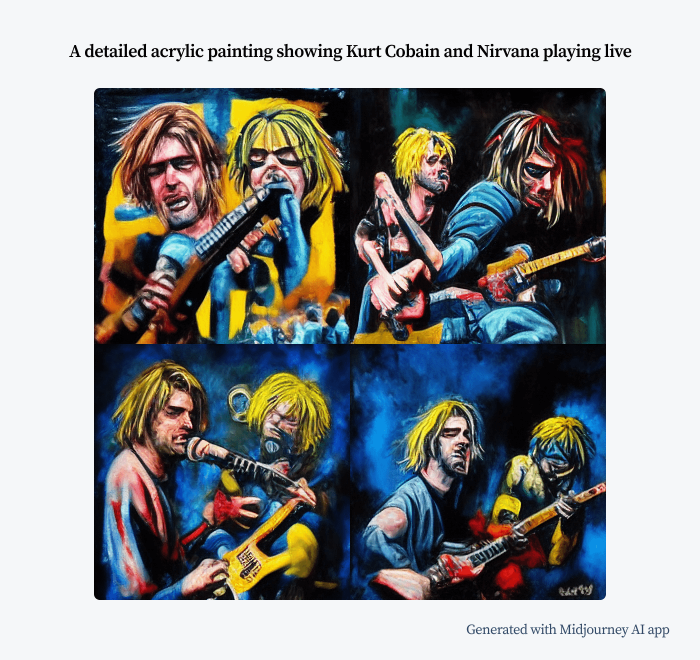

Here are the results produced via Discord with the Midjourney AI app.

It’s clearly a mess. Sonic’s leg becomes the arm of the anime boy. They look like they accidentally used a teleportation chamber together and were merged.

Let’s see if OpenAI’s DALL·E 2 is up to the challenge.

There is still some confusion about who is who, and traits such as spiky blue hair are mixed between the two characters. Not to mention the cat. Overall, however, the results are much better.

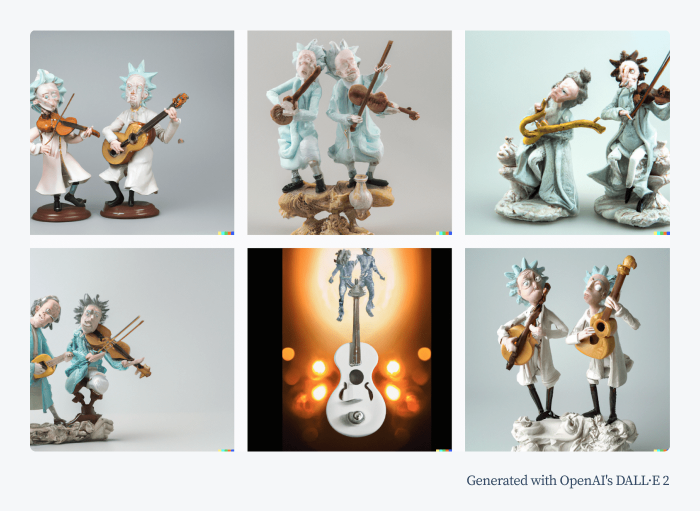

4. Crochet dolls and porcelain figurines of cartoon characters

DALL·E 2 is surprisingly good at imitating textures and materials. It can generate realistic renders of things made of metal, wood, dirt, clay, or fabric. It can also render more creative and unusual combinations, like animals made of glass or characters made of food, like potato Minions.

Here are some crochet dolls depicting characters from a popular cartoon.

And here are some late baroque porcelain figurines showing a character from another popular cartoon TV show.

Arguably, the porcelain should be a little shinier. This is the sort of thing that we can experiment with and adjust by adding more descriptions to our AI text-to-image prompts. Some of the subsequent examples will show more detailed and precise descriptions.

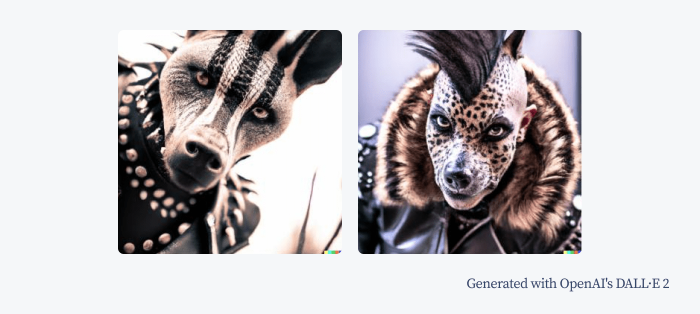

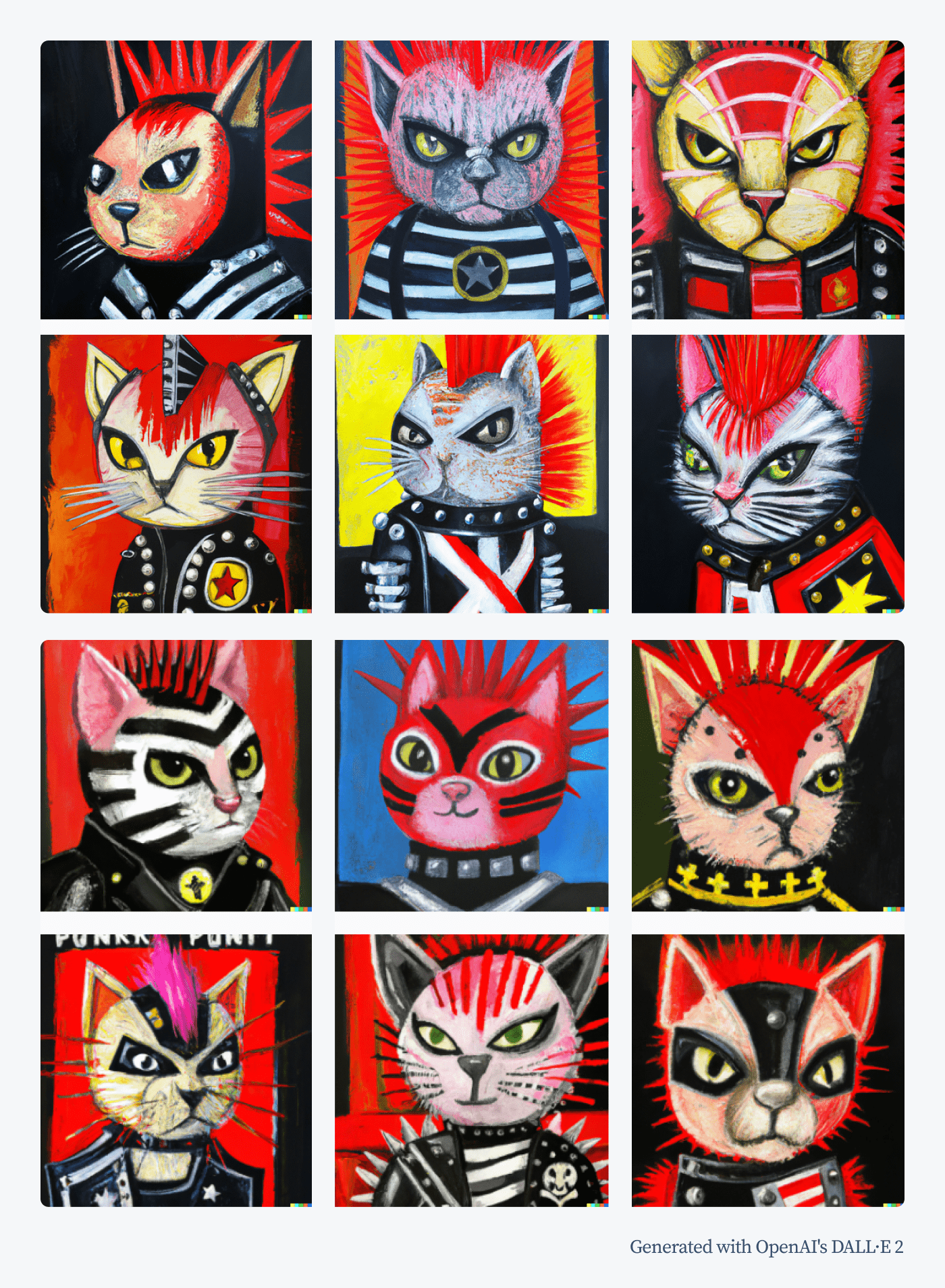

5. Punk rock animals generated with AI

Music seems to be the guiding theme of our AI experiments. Let’s try some prompts that use iconic looks and outfits. To stir things up a little, we’ll generate AI images of animals.

Intense F1.4 50mm high-resolution fashion photo of a punk rock monkey wearing a leather jacket, spiked collar, and mohawk.

And, here is how the technology presented a punk hyena.

A shallow depth of field and a specific focal length produce a very specific type of photo. However, we can experiment with different painting techniques too.

Here are some acrylic illustrations showing punk rock kitty cats generated with DALL·E 2.

They look pretty cool. It would be extremely easy to turn them into a collection of trading cards or NFTs. However, OpenAI’s content policy explicitly prohibits using DALL·E 2 for creating NFT artworks.

What are some other rules for using the DALL·E 2 generator?

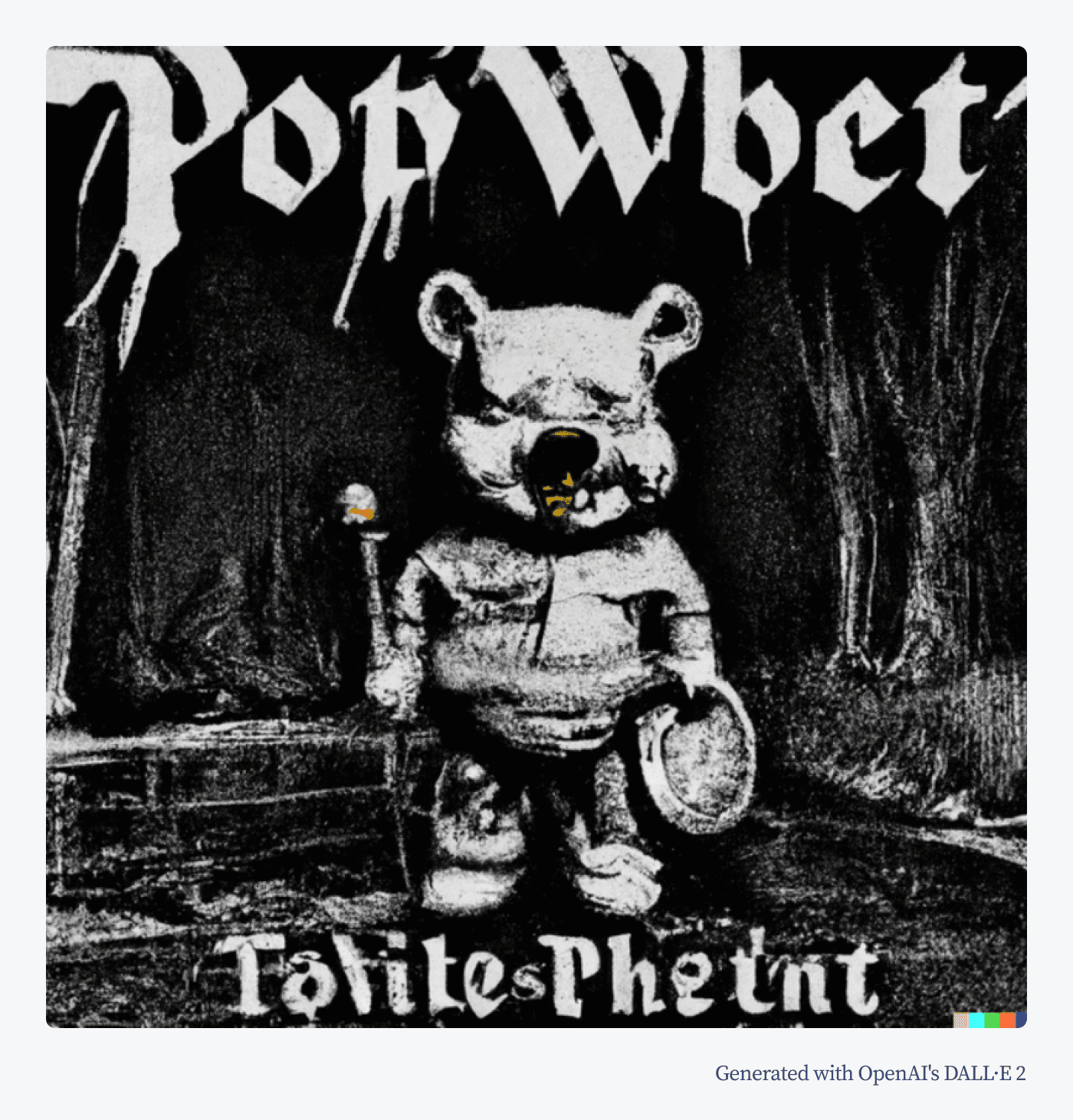

You can’t generate images containing violence, nudity, or other offensive or disturbing visuals. This type of content was excluded from the training data, and you can also get banned for using certain words. For example, you can’t use “death” in your prompts, even if the word is part of a fixed expression or common name. For this very reason, you can’t generate images from a prompt like “Winnie the Pooh in the style of a death metal album cover.” You have to change it to heavy metal or black metal instead.

Winnie the Pooh in the style of a black metal album cover artwork
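To see why a fixed expression still trips the filter, here is a minimal sketch of a naive word-level check. It is an illustration only, not OpenAI’s actual moderation logic: the blocklist and the helper names `find_blocked_words` and `swap_phrase` are hypothetical, and the only blocked word assumed here is “death”, taken from the example above.

```python
import re

# Hypothetical blocklist for illustration; "death" is the only word
# taken from the article, and the real filter is not public.
BLOCKED_WORDS = frozenset({"death"})


def find_blocked_words(prompt, blocked=BLOCKED_WORDS):
    """Return the blocked words that appear in the prompt as whole words."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    return sorted(words & blocked)


def swap_phrase(prompt, old, new):
    """Replace a phrase case-insensitively, e.g. to rename a music genre."""
    return re.sub(re.escape(old), lambda _match: new, prompt, flags=re.IGNORECASE)


original = "Winnie the Pooh in the style of a death metal album cover"
print(find_blocked_words(original))  # the genre name alone is enough to flag it

reworded = swap_phrase(original, "death metal", "black metal")
print(reworded)
print(find_blocked_words(reworded))  # nothing is flagged after the swap
```

A check like this looks at individual words and has no notion of context, which is one plausible reason a harmless genre name would be rejected.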

Now, let’s get back to our favorite animals.

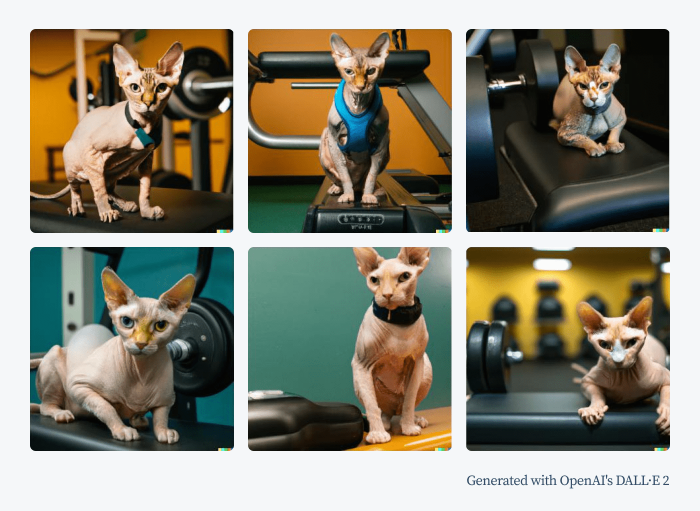

6. Cats hit the gym

You may have seen a viral photo of a “buff hairless cat” who looks like a bodybuilder. Trying to recreate a similar image with AI text-to-image generators was very difficult. For some reason, things like “extremely buff” and “very muscular” didn’t work that well.

While the images look quite convincing and OpenAI’s app got the “at the gym” part of the prompt, the cats still look skinny.

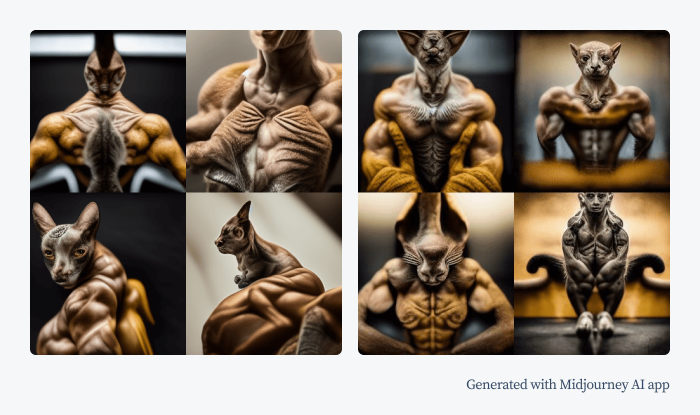

Fine-tuning the prompt produced some interesting results.

But it is still not quite what you would expect.

Interestingly, the Midjourney app generated more over-the-top images. Although it’s hard to call these creatures cats anymore.

The results are somewhat disturbing, but also very intriguing. Midjourney can sometimes generate images that look much more artistic and unusual. Experimenting with different prompts is definitely a means of artistic expression in this case.

We’ve been using a number of different apps so far. Now let’s go through them one by one and learn how to create your own AI artwork with text-to-image tools.

Best text-to-image apps and AI image generators

A person who wants to get familiar with AI solutions for image generation can quickly feel disoriented.

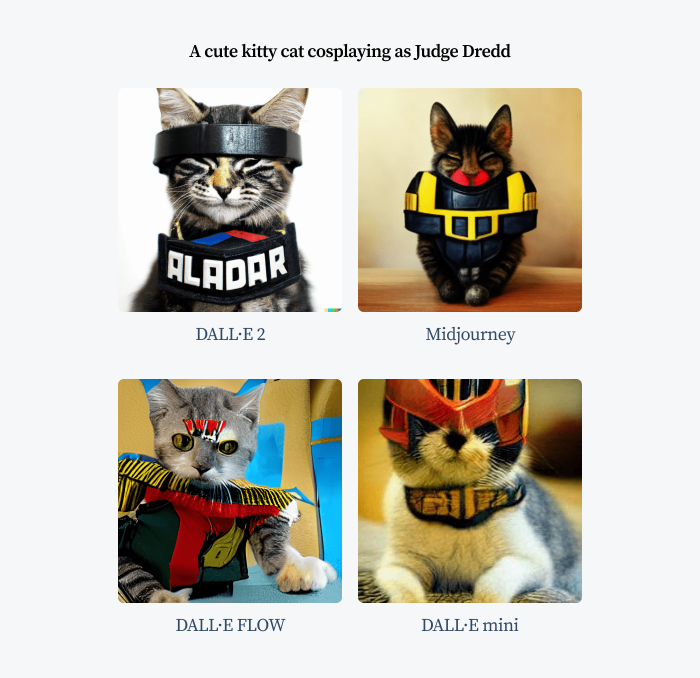

First of all, why are there so many DALL·Es? Which one is the real deal?

DALL·E mini (now rebranded as Craiyon) is the most popular and viral app right now. If you’ve seen bizarre memes on social media showing things like “Saving Private Ryan With the Muppets”, they have probably been made with DALL·E mini. DALL·E FLOW is another project that borrowed the name.

Did you know? The name DALL·E is a reference to WALL·E, a cute robot from the animated movie by Pixar, and Salvador Dalí, the surrealist painter.

DALL·E 2 is the actual official app developed by OpenAI. It produces the best results, but access to it is limited. You can join the waitlist, but it may take months to get your invitation.

So, the best and most widely used AI tools for image generation right now are:

- DALL·E 2 (OpenAI)

- Midjourney

- DALL·E FLOW

- DALL·E mini

Here is how they compare against each other

Let’s take a closer look at each of them:

1. DALL·E 2 (by OpenAI)

DALL·E 2 is the state-of-the-art solution for creating text-to-image renders with AI. You can submit prompts to generate several results. Then you can choose your favorite option and generate additional variants of it. For creating variants, you can also upload any image of your own.

2. Midjourney

Midjourney is a subscription-based text-to-image app that operates via a Discord server. You can access it to watch images produced by other users or submit your own requests via messages. The whole process is very intuitive. You can generate about 200 images for about $10, which seems like a fair price. Just think about it: you can get a new cool poster design for 5 cents!
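The 5-cent figure is simple division, and the same check is handy when comparing other plans. The sketch below uses the approximate numbers quoted above ($10 for about 200 images); the function name `cost_per_image` is made up for this example.

```python
def cost_per_image(total_price, image_count):
    """Average price of one generated image, in the same currency as total_price."""
    if image_count <= 0:
        raise ValueError("image_count must be positive")
    return total_price / image_count


# Approximate figures quoted in the article: about $10 for about 200 images.
per_image = cost_per_image(10.00, 200)
print(f"${per_image:.2f} per image")
```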

3. DALL·E FLOW

This AI text-to-image solution can generate very interesting results and it’s completely free. Unfortunately, it’s a bit more difficult to use because you have to initialize the process through a Colab notebook yourself. It means clicking several buttons, modifying the command with your prompt, and running it—all of which are extremely simple to do but can look intimidating.

4. DALL·E mini

DALL·E mini was not created by OpenAI. The name is used by various projects that want to take advantage of its popularity, and the fact that the real McCoy is not publicly available makes that easier. Still, this tool is the easiest to use and the most viral among users. You just need to go to the website, enter your prompt, and after about a minute you can see your results. And, to be fair, they can be quite hilarious.

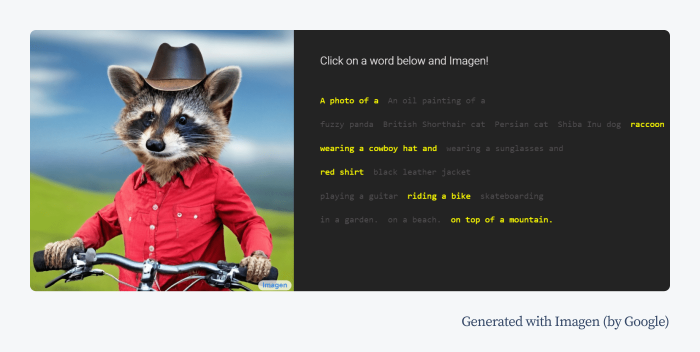

5. Imagen (by Google)

Additionally, Google is working on its own advanced solution for text-to-image using machine learning and diffusion models. This application, however, is currently the most difficult to get access to or try out firsthand. Reportedly, it produces images that can compete with DALL·E 2.

OK—

But even when you do get your hands on one of the apps above, you could probably use some tips. Usually, there are time and generation limits, so it makes sense to craft better prompts for generative AI from the very start. If you can try only a few prompts per day, you should make every one of them count.

How to write text prompts for text-to-image generators?

There aren’t really any specific rules about how to create effective prompts for AI. Different generative AI models focus on different parts of the text. The results can be quite unpredictable, so it’s best to experiment on your own.

You can rearrange the parts of your description and use them interchangeably. Also, by mentioning something multiple times, you can put more emphasis on a specific aspect you are interested in.
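Rearranging and repeating fragments is easier to experiment with if the prompt is assembled from a list. The following is a minimal sketch under that assumption: `build_prompt` is a hypothetical helper, the fragments are borrowed from the punk rock examples earlier in the article, and whether repetition really shifts the result depends on the model you use.

```python
def build_prompt(parts, emphasize=None, repeats=2):
    """Join prompt fragments with commas.

    If `emphasize` is given, that fragment is appended again so that it
    appears `repeats` times in total.
    """
    cleaned = [part.strip() for part in parts if part.strip()]
    if emphasize is not None:
        if emphasize not in cleaned:
            raise ValueError(f"{emphasize!r} is not one of the prompt parts")
        cleaned.extend([emphasize] * (repeats - 1))
    return ", ".join(cleaned)


parts = [
    "acrylic illustration",
    "punk rock kitty cat",
    "leather jacket",
    "mohawk",
]

print(build_prompt(parts))
print(build_prompt(parts[::-1]))                 # same parts, different order
print(build_prompt(parts, emphasize="mohawk"))   # "mohawk" is mentioned twice
```

Keeping the fragments in a list makes it cheap to try several orderings of the same idea when you only have a handful of generations per day.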

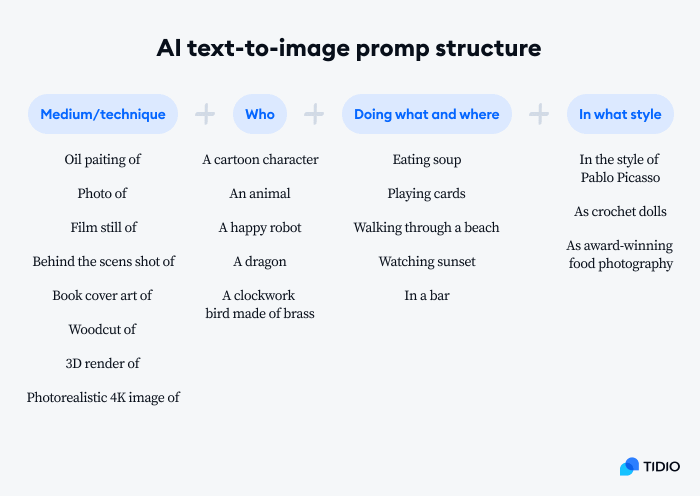

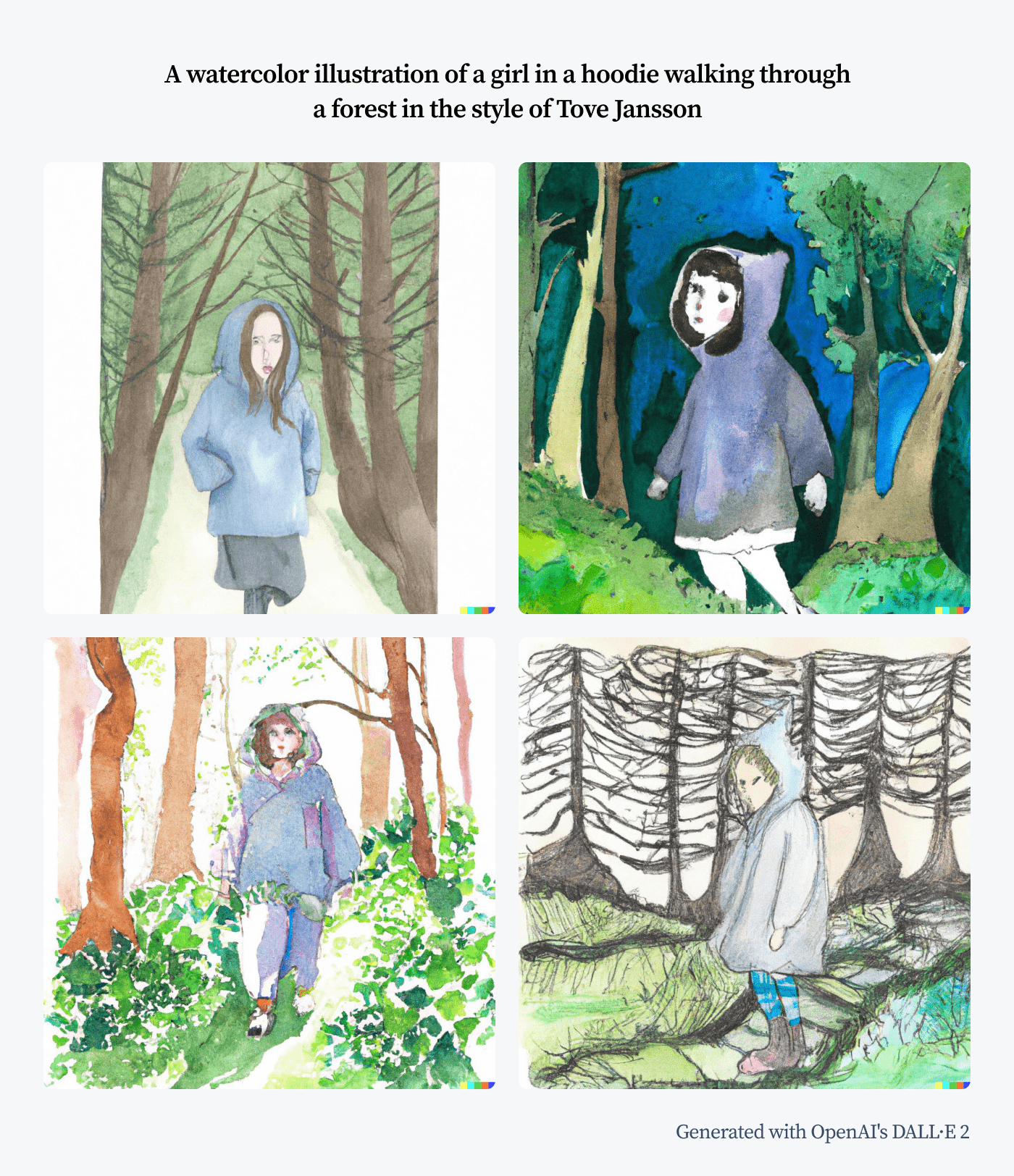

The most universal formula for creating detailed and descriptive prompts would look something like this:

Here is an example:

Looks quite realistic, wouldn’t you say so?

AI-generated art: conclusion

It’s clear that text-to-image tools are becoming more and more popular, and for good reason. They can be a lot of fun, and they also have the potential to produce some really great results.

The speed at which AI is advancing can be overwhelming. But instead of worrying, it is probably best to focus on how we can use generative AI to boost our creativity.

Fortunately, there’s no need to worry about the death of art just yet. AI text-to-image generators are getting better and better at creating realistic images. But they still have a long way to go before they can create works of art that can rival those created by humans.

References and sources

- Will AI Take Your Job? Fear of AI and AI Trends for 2022

- The New Generation of A.I. Apps Could Make Writers and Artists Obsolete

- Big Tech Is Replacing Human Artists With AI

- Can AI Replace Artists?

- DALL·E 2: Content Policy

Methodology

All of the images created for this article were generated with:

There were no enhancements or alterations.

For the statistical data, we surveyed 842 English-speaking respondents from different interest groups on Reddit focusing on art, AI, and technology.