What is one problem you should keep in mind when researching information on the internet?

Not all sources are reliable.

You need to be careful about the information you find, and verify it with other sources before using it or passing it on.

When working on a paper or writing an article, we are usually alert to this issue.

But are we equally suspicious when we scroll through social media or casually surf the web every day?

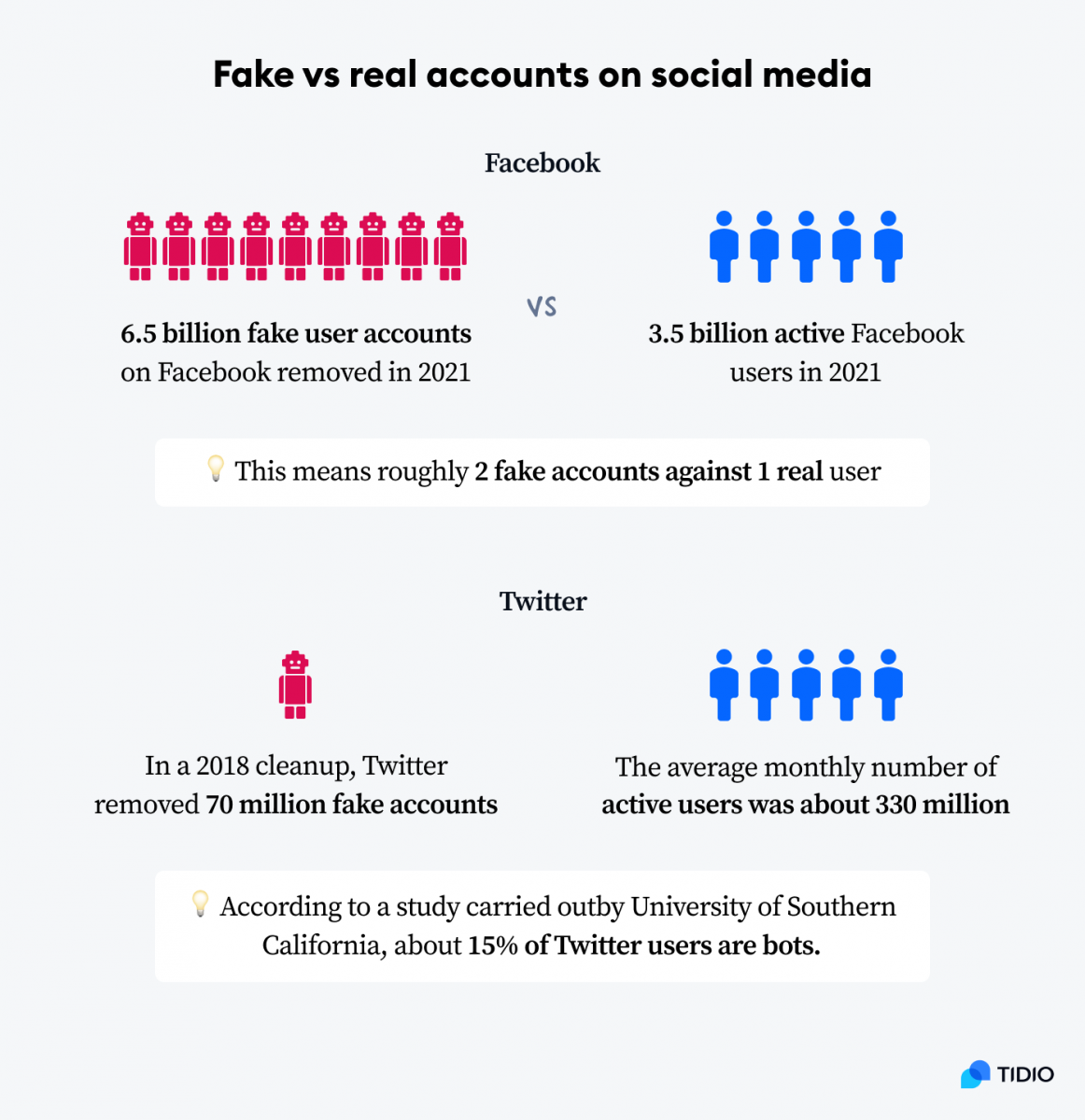

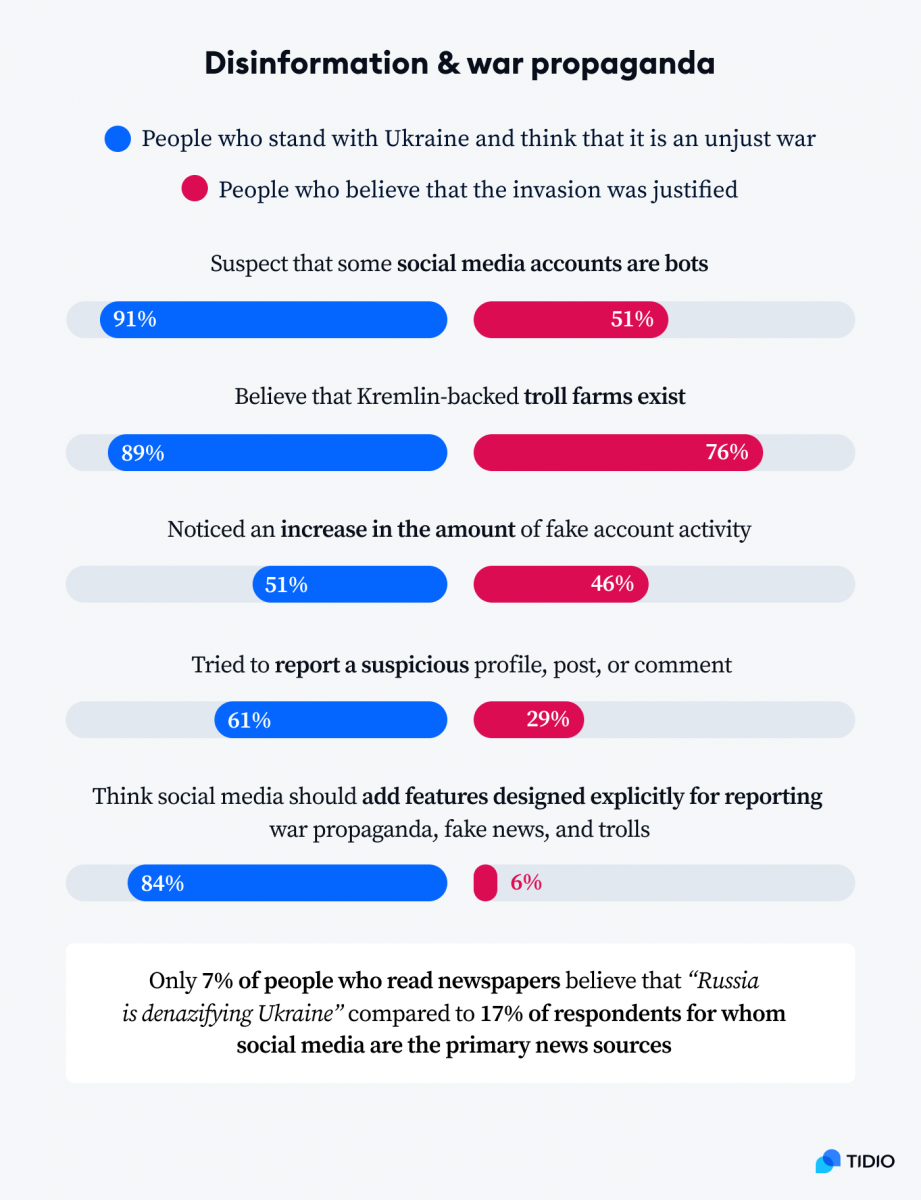

Troll farms and bots posting fake news online are the major sources of disinformation related to climate change and COVID-19 vaccines. Recently, Facebook and Twitter removed thousands of fake accounts targeting Ukrainians by spreading disinformation and Kremlin propaganda.

Truth can be boring and complicated. Disinformation campaigns, on the other hand, prey on our emotions. An MIT study of Twitter found that false news spreads about six times faster than true stories and is 70% more likely to be retweeted.

If we go online, we should always switch our brains to “fake news detector” mode.

We carried out our own research to see how big the problem is and how people deal with fake information online. Here are the main social media misinformation statistics and fake news facts we discovered:

Let’s first define fake news and disinformation to get the background right.

What is disinformation?

Disinformation is false or misleading information that is deliberately spread in order to deceive people. Some examples of disinformation include propaganda, rumors, or hoaxes. Disinformation can be used to influence public opinion or to divert attention from the truth.

The terms disinformation and misinformation are often used interchangeably, but they actually have slightly different meanings.

What is the difference between misinformation and disinformation?

A lot of the time, people spread misinformation because they believe it’s true. Disinformation, on the other hand, is more deliberate and intentional. It is a subset of misinformation in which the deceivers know that they are spreading lies or rumors.

Fake news is another term for deliberately false or misleading information, about the pandemic, politics, and much more, that is usually spread online.

Why is fake news a problem? Because disinformation campaigns typically aim to discredit an opponent or to influence public opinion. Disinformation can be used to cast doubt on the credibility of a person or organization, or to make it seem like an opponent’s policies are more radical than they actually are.

Now, what is the scale of the booming disinformation industry?

How much of the internet is fake?

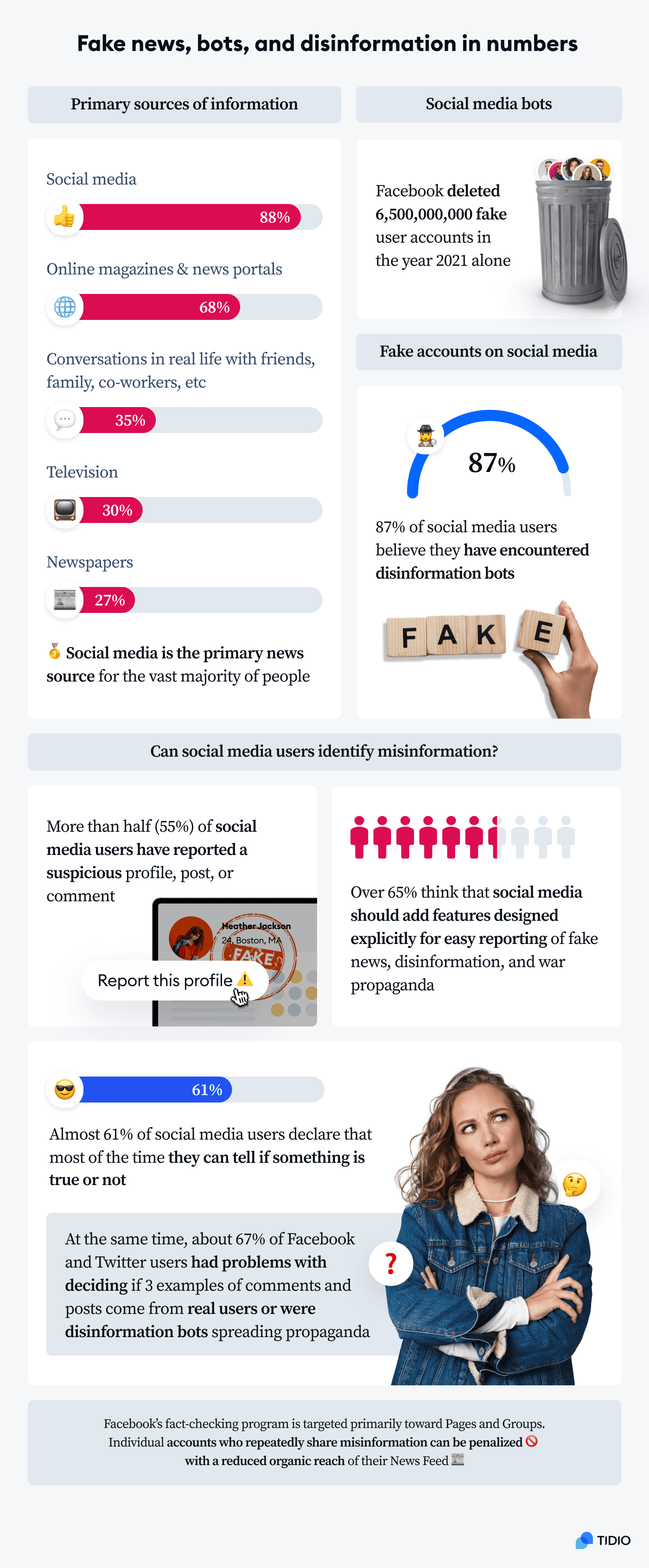

There are billions of social media bots spreading misinformation. Check the infographic below to see the exact numbers and percentage of unreliable information on the internet for Facebook and Twitter.

How does fake news affect social media?

It erodes the trust that users have in social media platforms. Bot farms and social media play a huge role in spreading fake news and disinformation. Platforms like Twitter or Facebook allow users to share stories without verifying their sources. Anyone can share information with a large number of people in a few clicks, and many social media news consumers believe what they see online without questioning it.

Examples of fake news on social media include:

- False news stories connected with internet scams and “get rich quick” schemes

- Denial of climate change and of environmental concerns related to traditional fuels

- Misinformation about COVID-19 and vaccinations, such as claims of miraculous remedies

- Pushing political agendas or discrediting political opponents

- War propaganda and information warfare

In the context of recent events in Ukraine, it is worth noting how big a role social media disinformation plays.

Where exactly does disinformation come from, and how can you spot fake news sites?

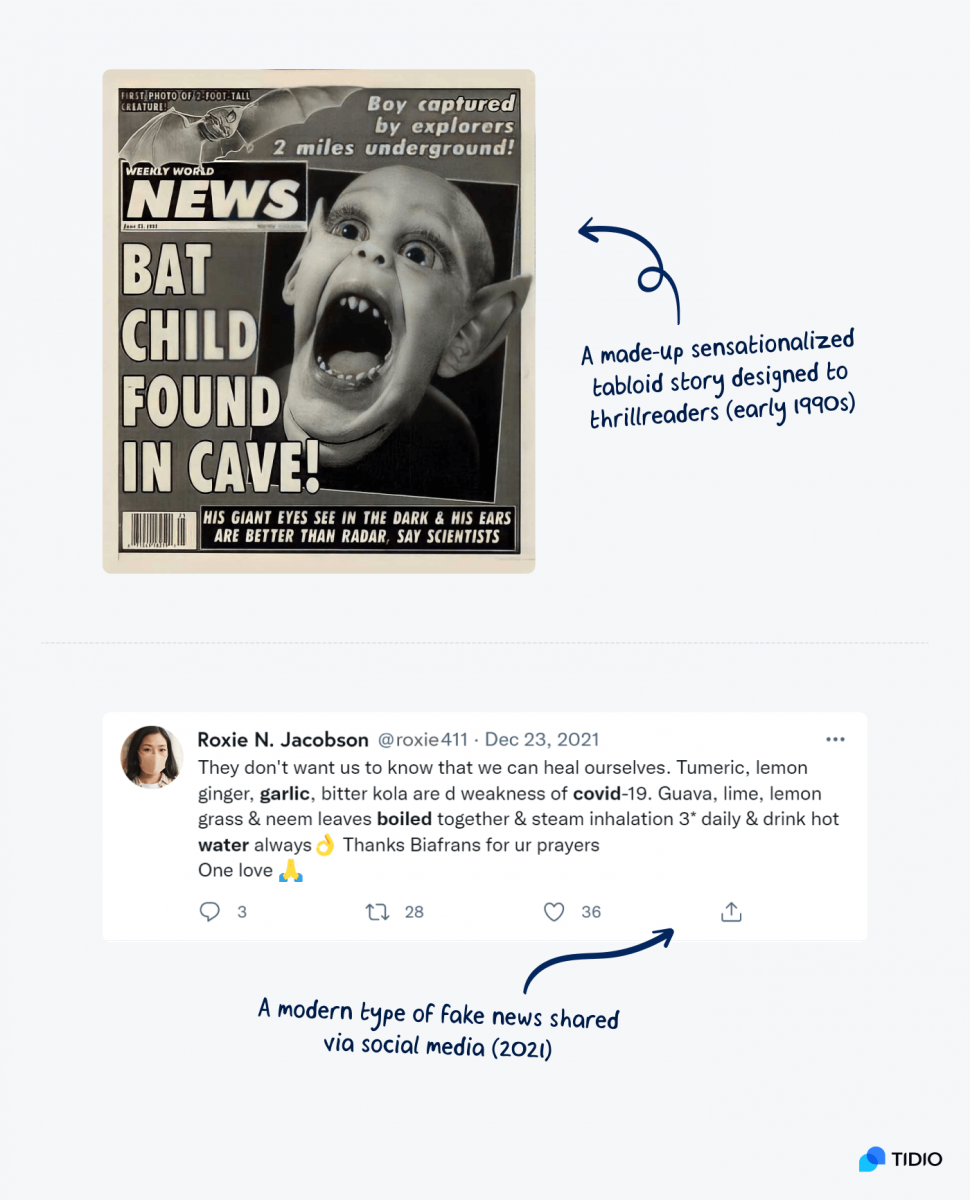

Fake news can come from a variety of sources. For instance, “clickbait” websites deliberately publish sensational or exaggerated stories in order to get people to click on them. Many of these websites make money by running ads, so they have a financial incentive to publish fake news.

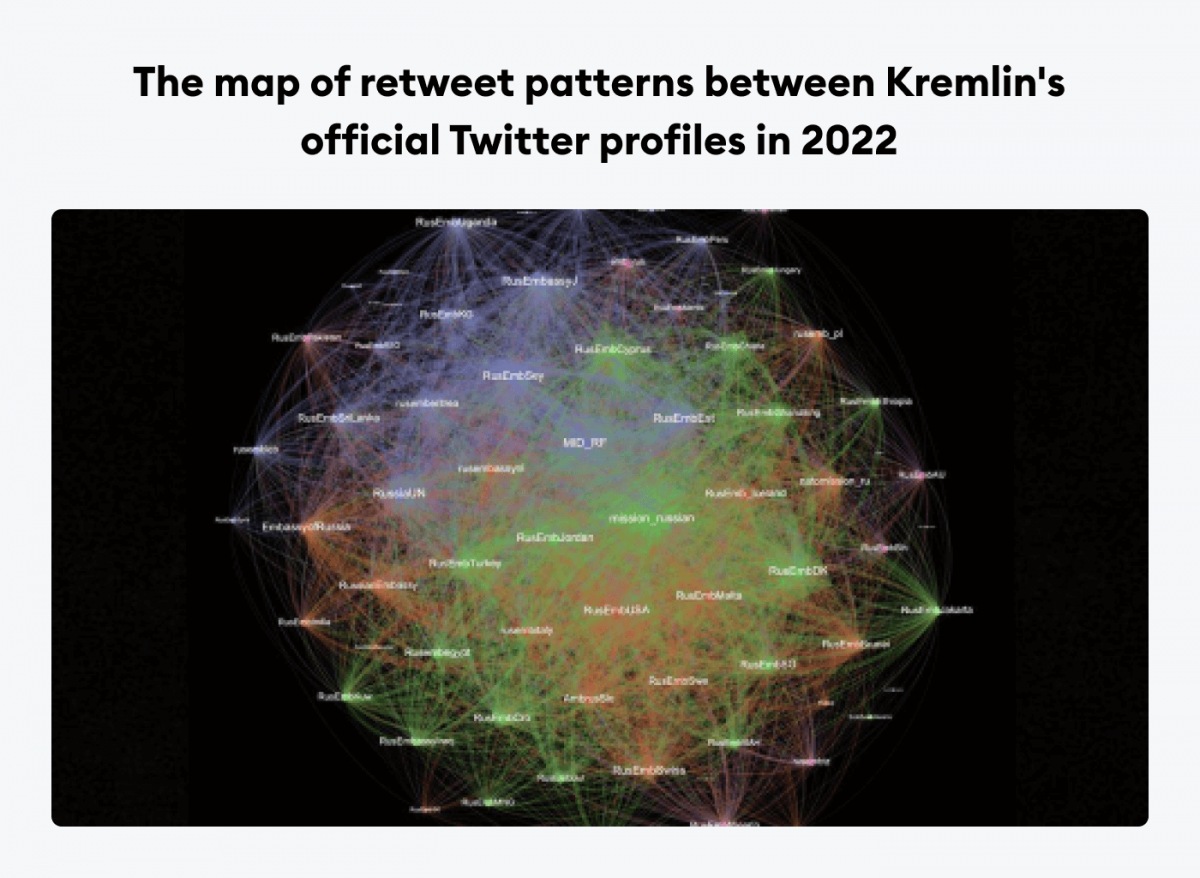

When it comes to social media, however, among the biggest organized groups spreading online disinformation are the so-called Russian web brigades.

The use of social media and online forums by the Russian government has been well documented, and the Russian troll armies are a major part of this. These brigades are made up of paid trolls who post comments and links to pro-Russian articles and videos in order to influence public opinion.

The visualization above shows how pro-Kremlin Twitter accounts retweet each other’s content, including fake news about the war. This network shows only official government profiles, which are the very tip of the iceberg.

But who’s responsible for disinformation, and why do people spread fake news?

Who spreads fake news and why?

There are many reasons someone might spread fake news and disinformation. Some people do it for financial gain, and others do it to promote their political or social agenda. Still others do it just to cause chaos and confusion.

- Scammers. They are interested in financial gains and use false news to promote schemes.

- Professional trolls. They carry out organized operations to influence public opinion and get paid by third parties.

- Politicians. They deliberately spread false information to discredit their opponents and build political support.

- Pranksters. They perpetuate confusion and misinformation because it fuels their excitement.

- Celebrities. They are willing to speak their minds on trending topics if it helps them gain popularity.

- Conspiracy theorists. They are ready to publish online news that shows they are edgy and enlightened.

The last group, conspiracy theorists, is quite an interesting case. It may consist of more people than we commonly think.

A study by Toby Hopp revealed that people who lack trust in conventional media are more likely to share disinformation. There was a strong correlation between political views and the amount of fake news shared online. These fake news statistics showed that social media users at the ideological extremes, both left-wing and right-wing, shared false information more frequently than the rest.

They [conspiracy theorists & people with strong political views] commonly enforce a worldview comprised of two groups of people: those in the know (a small group of people, such as readers of the site) and “sheeple” (everyone else, and especially those who populate the dominant political sphere).

For many people, having access to “secret” knowledge produces a sense of superiority. The very fact of “not following the herd” is seen as a virtue. At the same time, users who spread misinformation are not interested in fact-checking because the goal is not the truth as such. It is all about being in opposition to the commonly accepted consensus.

When it comes to the deliberate mass distribution of misinformation, most false posts and comments are spread by professional trolls. Many of them operate in developing countries.

Recently, MIT Technology Review published a report with some eye-opening statistics. In the run-up to the 2020 presidential election in the United States, content produced by troll farms on Facebook may have reached almost 140 million Americans per month. Many of these fake accounts were responsible for astroturfing. Twitter bots also played a significant part in Trump’s presidential election campaign.

What is Astroturfing?

Astroturfing is the practice of masking the sponsors behind a message or organization. It is a way to influence public opinion by creating the illusion of a grassroots movement. Astroturfing can be used to promote or discredit a particular idea, person, or product. It is often used in politics, but it can also be used in business or marketing.

So, we already know what disinformation is and who’s responsible for it. Let’s look at some misinformation statistics and how fake news spreads.

Reasons why misinformation and fake news spread

Misinformation and fake news are nothing new, but they have been spreading like wildfire in recent years. So, what’s behind this phenomenon? Why do these false stories spread so quickly, and why do people believe them?

There are several factors at play:

- Technology. The internet has made it easier than ever for misinformation to spread. With social media, we can share news stories and articles with just a few clicks, and they can quickly go viral. There are no fact-checking processes involved.

- Confirmation bias. People are more likely to share information that confirms their own views. We tend to look for and trust information that supports our beliefs and ignore information that doesn’t. This can lead us to share inaccurate information online without realizing it.

- Social factors. Social media users are more likely to trust information that comes from their friends and family members, even if the source is a cousin of a friend of a friend. We tend to believe information that is shared by people we know and trust, even if it’s not 100% accurate.

- Clickbait content. Misinformation and fake news can often be sensationalized or presented in a way that is designed to grab people’s attention. Entertaining news is more shareable than real stories, which usually tend to be more nuanced.

But why is fake news dangerous?

Because some internet users rely primarily on social media for their news. In fact, Pew research shows that 18% of U.S. adults mainly use social media for their news consumption. One example of the impact of fake news is that it undermines trust in official government statements, which can lead to disruptions in the real world.

So, can we stop it? Or at least slow it down?

How to stop fake news from spreading

There are a few things you can do to help stop the spread of fake news. First, be skeptical of everything you read online, and check the source before sharing it. If you see a story that looks too good (or bad) to be true, it probably is. Second, only share stories from reputable sources. And finally, if you see a story that you know is fake, report it to the website or social media platform where you saw it.

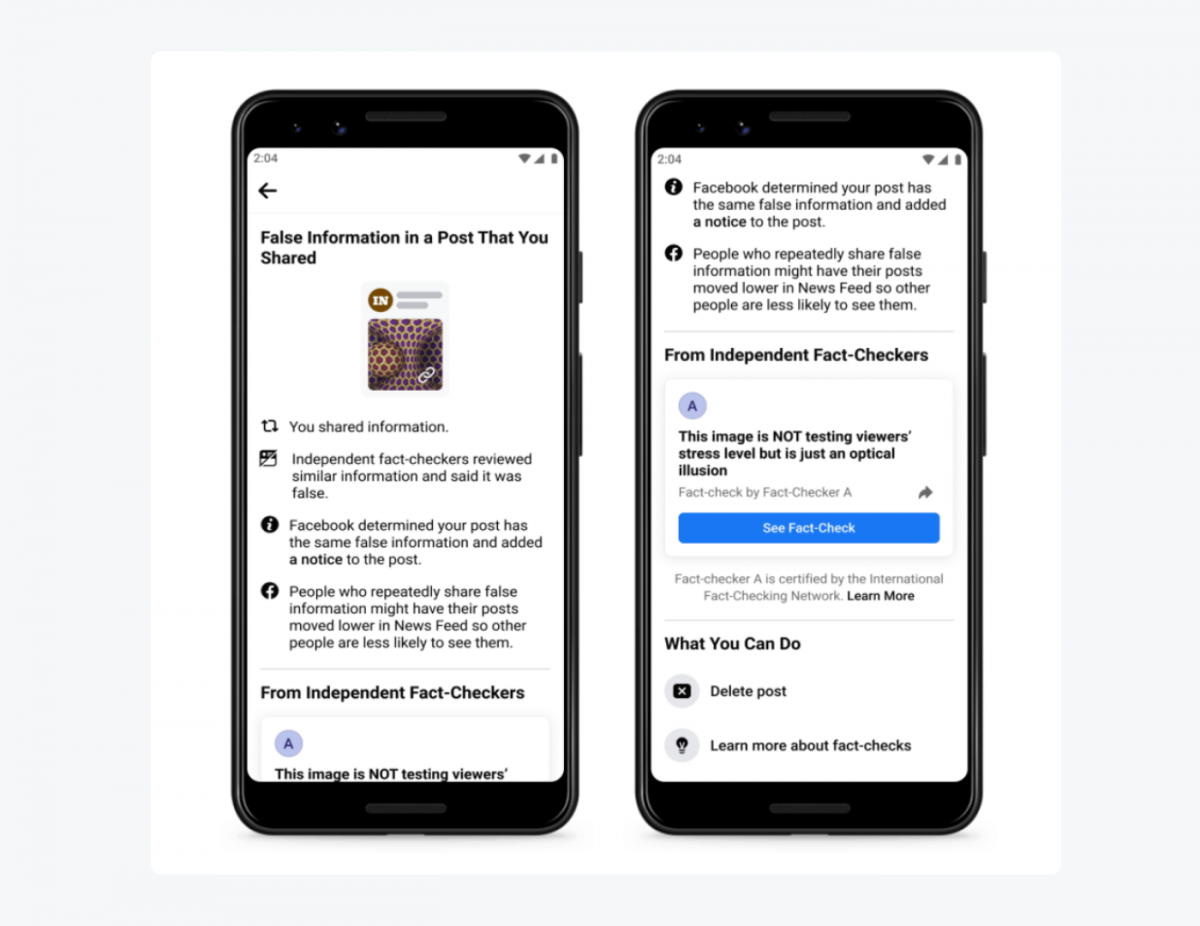

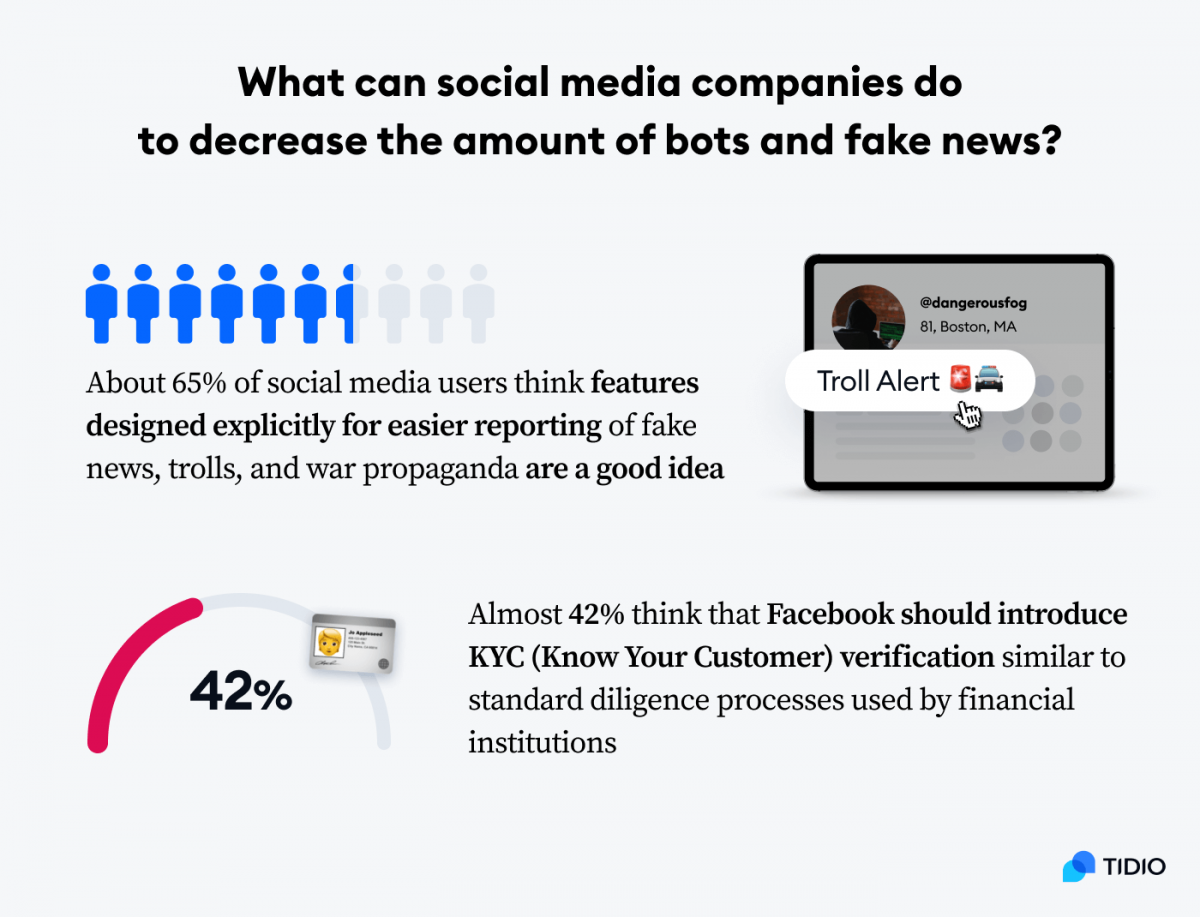

A great deal depends on the steps taken by tech companies and by platforms such as Facebook, Instagram, and Twitter themselves. The amount of fake news can be reduced by making it easier for users to report it and by employing teams of fact-checkers who know how to detect fake news and can verify stories.

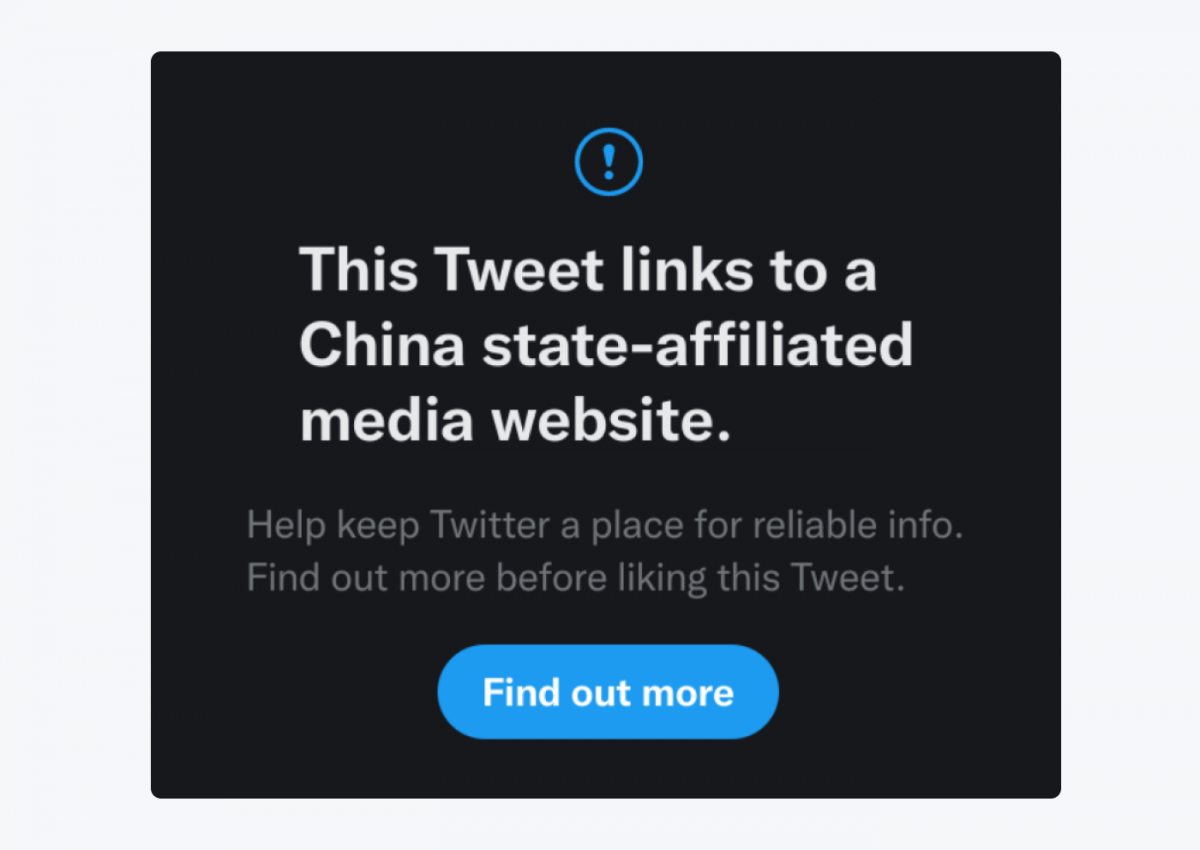

Facebook and Twitter are aware of the problem. Their stance on disinformation is transparent, and they introduce new features that help to fight fake news stories.

One of the problems is that social media platforms can’t stop the spread of misinformation without compromising user privacy. Better user verification would reduce the number of bots and fake accounts and make fake news detection on Facebook easier. However, only 42% of users think that ID-based user verification would be beneficial in the long run.

It should also be noted that fact-checking, at the end of the day, can be a form of censorship and limitation of free speech.

An overly aggressive approach towards removals would have a chilling effect on free speech […] I personally believe we’re better off as a society when we can have an open debate. One person’s misinfo is often another person’s deeply held belief, including perspectives that are provocative, potentially offensive, or even in some cases, include information that may not pass a fact checker’s scrutiny.

Additionally, some argue that “social” problems shouldn’t be addressed with “technological” solutions. Digital media literacy can be more important than algorithm updates. Educating your friends and family on how to identify fake news and taking a more critical approach to the information we see online is the best thing you can do. There are some steps you can take to make it easier.

How to identify fake news and disinformation

One of the important facts about fake news is that sometimes it can be impossible to decide if something is disinformation or not. There are no fixed rules. It is hard to distinguish between a bot that launches a disinformation campaign and gullible users who unwittingly share it with good intentions in mind.

However, some giveaways are easy to spot and should give you a hint when you are trying to identify misinformation in the media.

So, how do you spot fake news? If you follow the five steps below, you should be able to judge whether the tweet or Facebook post you have just encountered is credible.

Step 1: Analyze the wording and look out for red flags

Checking sources matters most, but the first step is something you can do yourself, immediately.

Read the post or comment carefully and look for some common patterns.

Does it use typical phrases that are meant to boost the strength of the message and increase its credibility?

Here are some red flags and typical phrases used in the biggest fake news stories:

- It happened to my husband/work colleague/brother-in-law’s cousin/friend…

- They don’t want you to know the truth…

- Please, share it before they try to delete it/shut it down…

- It hasn’t been confirmed yet, but I’m sharing it just in case (it turns out to be true)…

- I know it sounds too good to be true but…

- The government and the media are trying to cover it up…

Credibility is usually built by referring to a specific situation that happened to someone close or to an “insider.” Then, disinformation bots try to convince you that this is exclusive information. Supposedly, the government, the media, or “they” don’t want you to know about it and are “hiding the truth.” The rest of the message is usually meant to encourage you to share the news quickly before it disappears.
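The red-flag phrases listed above can be turned into a rough text check. The sketch below is only an illustration: the pattern names, the regular expressions, and the `find_red_flags` function are all assumptions made for this example, and a match is a reason to look closer, not proof that a post is false.

```python
import re

# Hypothetical patterns loosely based on the red-flag phrases listed above.
# They are illustrative assumptions, not a validated detector.
RED_FLAG_PATTERNS = {
    "anecdote from someone close": r"\bhappened to my\b",
    "hidden truth": r"\bdon'?t want you to know\b",
    "urgency to share": r"\bshare (it|this) before\b",
    "unconfirmed claim": r"\bhasn'?t been confirmed\b",
    "too good to be true": r"\btoo good to be true\b",
    "cover-up": r"\b(cover(ing)? it up|hiding the truth)\b",
}


def find_red_flags(text: str) -> list[str]:
    """Return the labels of all red-flag patterns found in the text."""
    # Normalize curly apostrophes so both spellings of "don't" match alike.
    normalized = text.replace("\u2019", "'").lower()
    return [
        label
        for label, pattern in RED_FLAG_PATTERNS.items()
        if re.search(pattern, normalized)
    ]


post = "They don\u2019t want you to know the truth. Please, share it before they delete it!"
print(find_red_flags(post))  # ['hidden truth', 'urgency to share']
```

Plain keyword matching like this misses paraphrases and will also flag posts that merely quote these phrases, so it works best as a prompt to move on to the next steps.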

Step 2: Cross-reference the info with trustworthy sources

These days, information circulates much faster than before. If something happens to be true and newsworthy, then the chances are that established media outlets have reported it already. Therefore, when spotting fake news, try to check Google News before sharing a post or retweeting something.

Here are some reputable (and disreputable) real news outlets:

It is also good practice to see what position expert organizations take on the matter in question. For example, if we suspect that something related to public health and medicine is false, we should check what the WHO’s official position is.

Step 3: Visit the profile of the person who shares the information

It’s hard to believe how much fake news is spread on social media by fake accounts. So, when you see something online, make sure to visit the person’s profile to check whether their post is genuine or just another piece of social media disinformation.

The credibility of a post or comment can often be evaluated by visiting the user’s profile. A lot of times, spammers, trolls, or people with fake profiles will not have much information on their profile, or they may share very little content unrelated to the agenda they are pushing.

Some things you should pay attention to are:

- Many posts that are persistently trying to push a very particular theory or opinion

- Posting from different countries and in different languages

- No credible information about education or employment

- Profile pictures that don’t show their face (or profile photos that use AI filters)

- Lots of pictures with children and animals (a common technique used to make profiles look more real)

- A name that looks made up, doesn’t really match typical names for a given region or is abbreviated to initials, etc.

- A low number of followers or followers who also look like fake accounts
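The checklist above can be written down as a simple tally. This is a minimal sketch under stated assumptions: the `ProfileSignals` class, its field names, and `count_warning_signs` are hypothetical names invented for this example, the values are filled in by hand after looking at a profile, and the article does not define any weights or cut-off score.

```python
from dataclasses import dataclass, fields


@dataclass
class ProfileSignals:
    """Manual observations about a profile; every field name is hypothetical."""
    pushes_single_agenda: bool = False
    posts_from_many_countries: bool = False
    no_education_or_job_info: bool = False
    faceless_or_filtered_photo: bool = False
    many_child_or_animal_photos: bool = False
    made_up_looking_name: bool = False
    few_or_fake_followers: bool = False


def count_warning_signs(signals: ProfileSignals) -> int:
    """Count how many checklist items were observed (no weighting applied)."""
    return sum(bool(getattr(signals, f.name)) for f in fields(signals))


observed = ProfileSignals(pushes_single_agenda=True, few_or_fake_followers=True)
print(f"{count_warning_signs(observed)} of {len(fields(observed))} warning signs")
# prints "2 of 7 warning signs"
```

A single sign means little on its own, since plenty of real people have few followers or no profile photo. The more items that apply at once, the more caution the profile deserves.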

Keep in mind that it’s easy to generate deepfakes and images that look like real people. Generative adversarial network (GAN) software can create realistic-looking images of people. Combined with programs like Photoshop, this can be used for a variety of purposes, such as creating fake social media profiles or making believable hoaxes.

Step 4: Use additional verification tools

There are many online fact-checking tools such as the Fake News Detector developed by MIT’s McGovern Institute for Brain Research. Many of them use AI and machine learning, but are still in an early phase of development. Right now it is better to focus on verifying information through fact-checking websites.

Fact-checking websites run by nonprofits and news organizations include:

- FactCheck.org

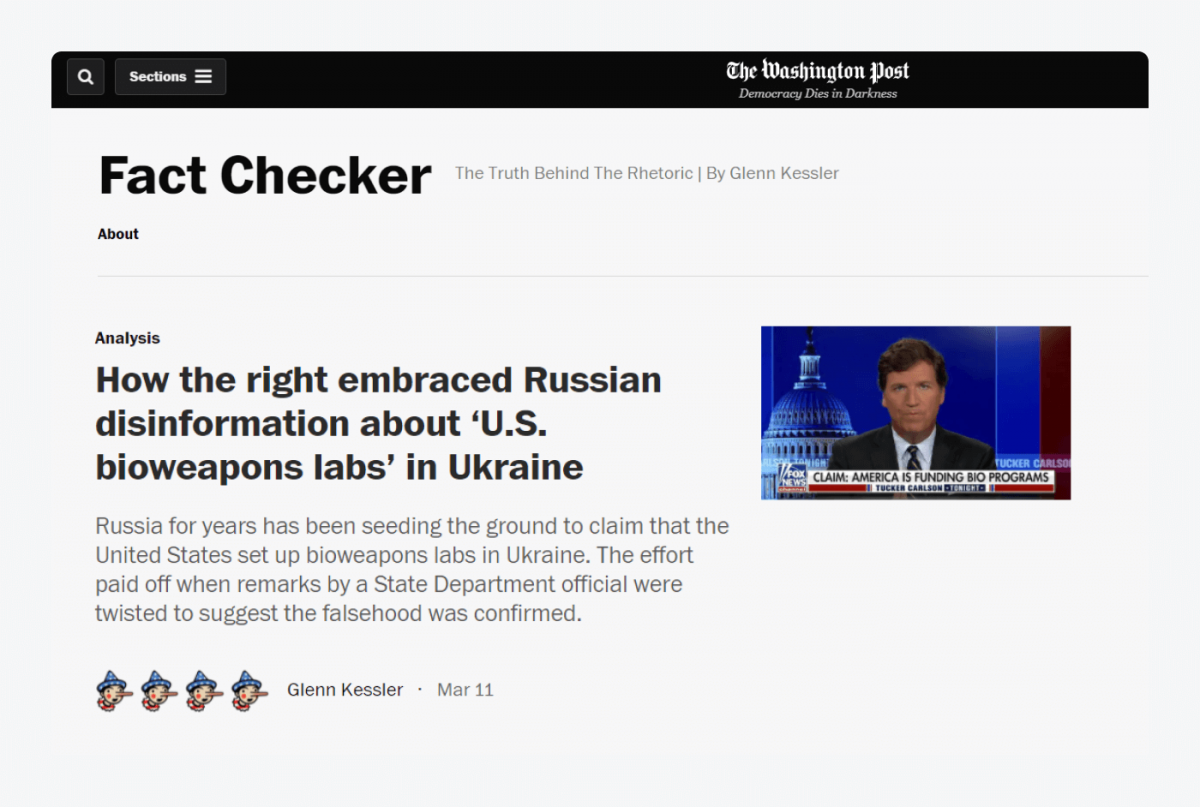

- Washington Post Fact Checker

- PolitiFact

- Snopes

If you want to analyze some elements of suspected fake news, you can also use apps such as Google Lens. Very often, fake news is illustrated with old photos from uncredited sources. You can use reverse image search to find out whether a photo has been published before. The original context frequently turns out to be completely different.

This example of fake news was debunked by reverse image search based on individual frames of the video.

Step 5: Report posts or profiles using the appropriate policy

If you are still having trouble deciding whether you are dealing with disinformation, it is very likely that you are. It’s a good idea to signal your concern and report any post, comment, or profile that you think is suspicious to help limit the effects of fake news.

Facebook and other social media platforms can use the information you provide to help track down the creators of fake accounts. Sometimes this also means bringing them to justice. Troll rings are shut down all the time. So, if you see something that looks suspicious or fake, be sure to report it!

Conclusion

It can be difficult to determine whether something is fake news, but by following these simple steps, you can make an informed decision.

- Check the source of the information

- Visit profiles of users who share it and see who they are

- Try to determine the agenda behind specific posts

- Employ fact-checking websites and tools

- Verify images and multimedia

And remember that reporting any suspicious posts or profiles is an excellent way to fight back and combat disinformation. We can work together to keep our social networks safer and slightly more trustworthy.

Misinformation is becoming more than just an annoyance—it can have serious consequences for both democracy and world security. It’s no longer just about people sharing fake stories on social media. We need to invest in media literacy education so that people can better identify disinformation and protect themselves from it.

Sources and useful tools

- What Facebook Did to American Democracy

- Facebook Reports: How Many Fake Accounts Did We Take Action On?

- Study: On Twitter, False News Travels Faster Than True Stories

- Online Human-Bot Interactions: Detection, Estimation, and Characterization

- Why Do People Share Ideologically Extreme, False, and Misleading Content on Social Media?

- Troll Farms Reached 140 Million Americans a Month on Facebook

- Coronavirus: The Seven Types of People Who Start and Spread Viral Misinformation

- How to Encourage Family and Friends to Stop Spreading Misinformation on Social Media

- The Global Business of Professional Trolling

- Perspective: Tackling Misinformation on YouTube

- FactCheck.org: A Project of The Annenberg Public Policy Center

- Fact Checker: The Truth Behind The Rhetoric

- PolitiFact: The Poynter Institute

- Snopes: The Internet’s “Definitive” Fact-Checking Resource

Methodology

For this study about misinformation, disinformation, and fake news, we collected answers from 470 respondents on Reddit. The survey used multiple-choice questions and Likert scales.
