In November 2022, OpenAI did a “low-key research preview” of ChatGPT.

What seemed to some as “just another fun bot to play with” has completely revolutionized the tech industry. And most likely the future, too.

ChatGPT entered human conversations like an unexpected guest at a dinner party, quickly seizing control and leaving everyone astonished.

True, there were plenty of hiccups, false alarms, and “I’m sorry, I don’t know”s, but time passed, and the world now has access to a pocket PhD in all disciplines.

Let’s reconstruct the events that made more than 100 million people adept at AI in less than a year and see how it changed us as a society.

A year of AI: main findings

We asked almost a thousand people (896, to be precise) to share their experience with generative AI, their hopes and fears for ChatGPT (and whether they came true), and their opinions on where this whole AI hype is going to take us. The results offer insight into where humans fit into the new world of robots.

Some of the findings genuinely surprised us. For example, can you imagine that as many as 80% of people support laws and regulations governing the use of AI? It seems like we’ve learned a lot in a year.

Here are some key findings:

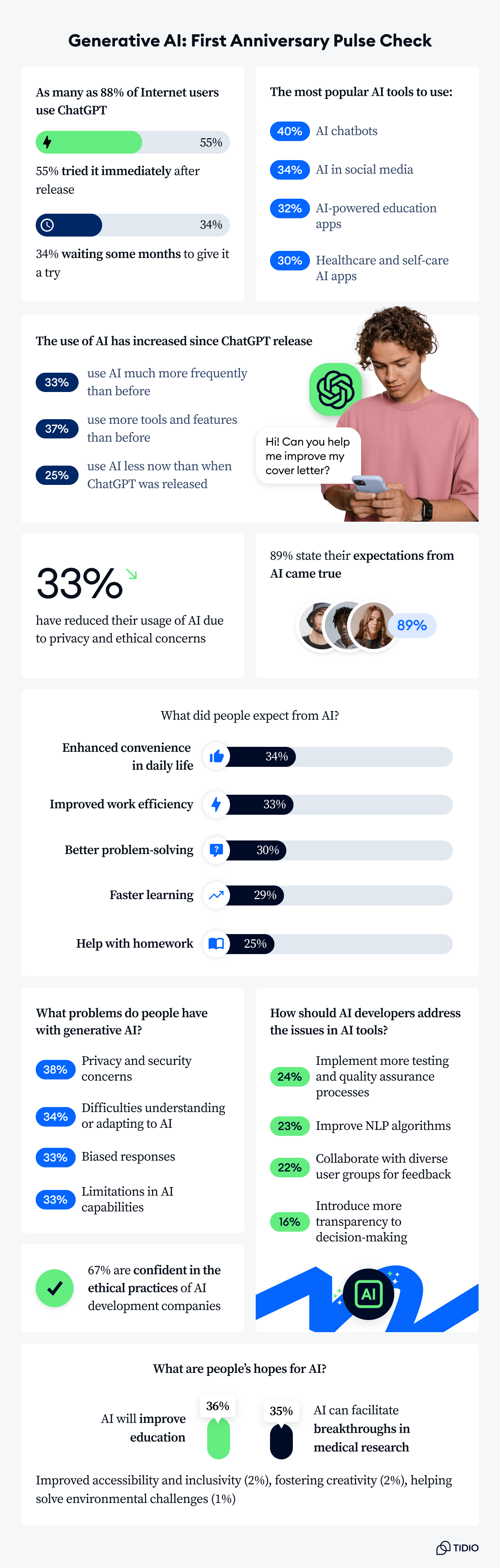

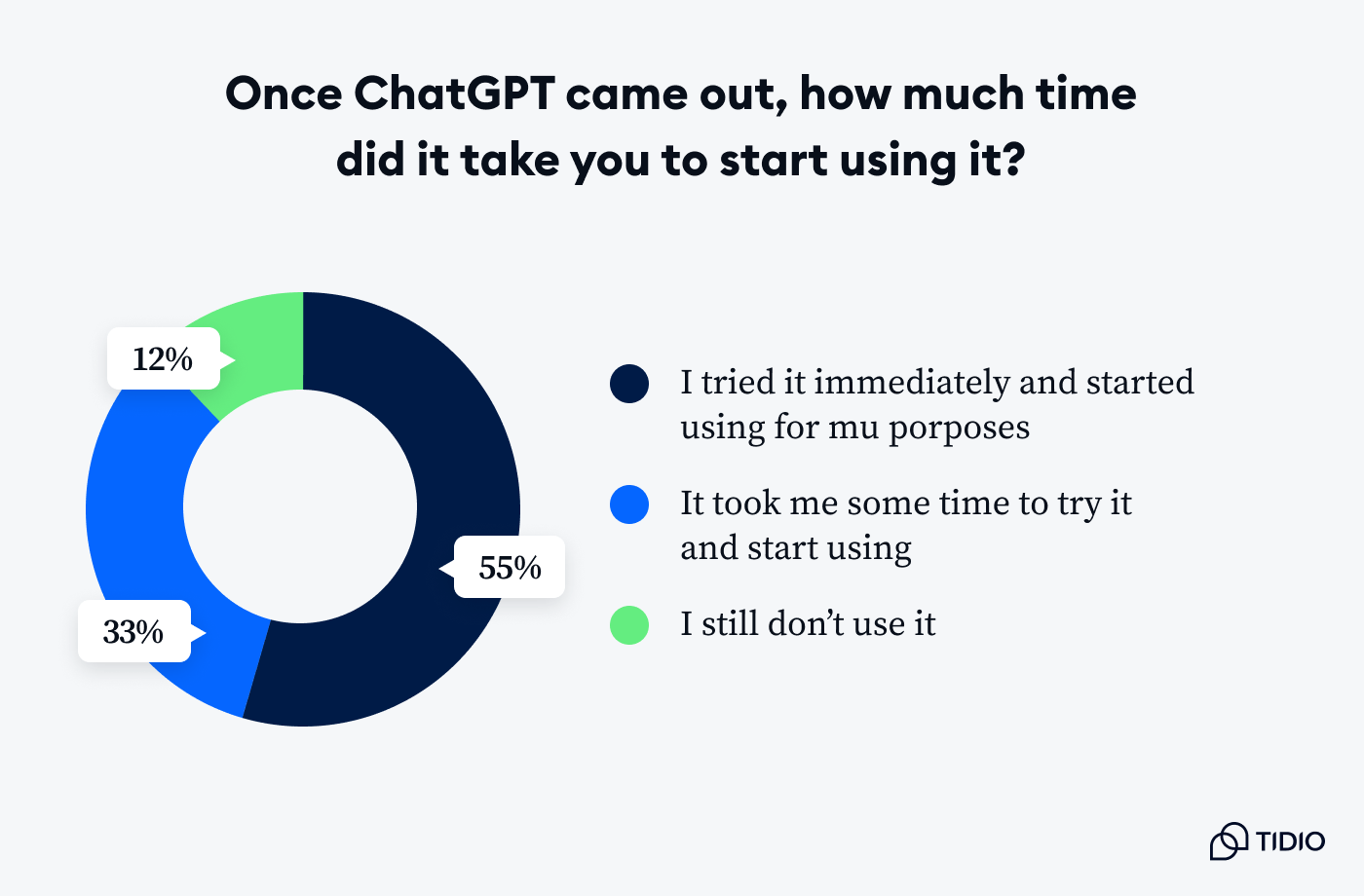

- As many as 55% of respondents tried ChatGPT immediately after it came out, while about 34% took their time and waited before giving it a go

- A whopping 88% of people use ChatGPT: of them, 47% do so occasionally, 32% daily, and 20% rarely

- AI chatbots are the most popular AI tools to use: 40% of respondents interact with them regularly. The next in line are AI in social media (34%), education apps (32%), and healthcare AI apps (30%)

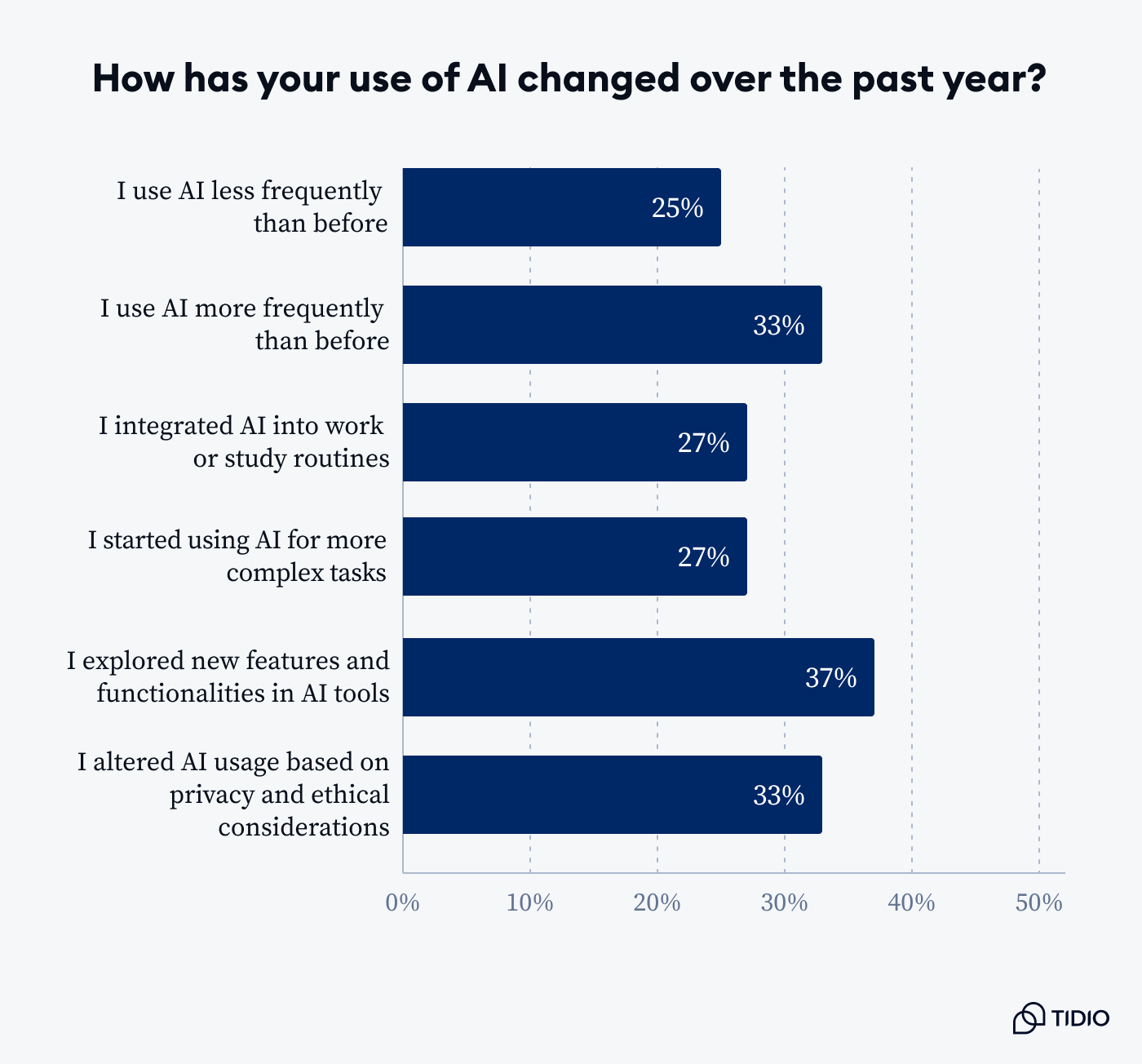

- A third of the respondents (33%) use AI much more frequently than when ChatGPT first came out, while 25% use it less often. About 37% increased the number of features and tools they use

- As many as 33% have altered their usage of AI due to privacy and ethical concerns

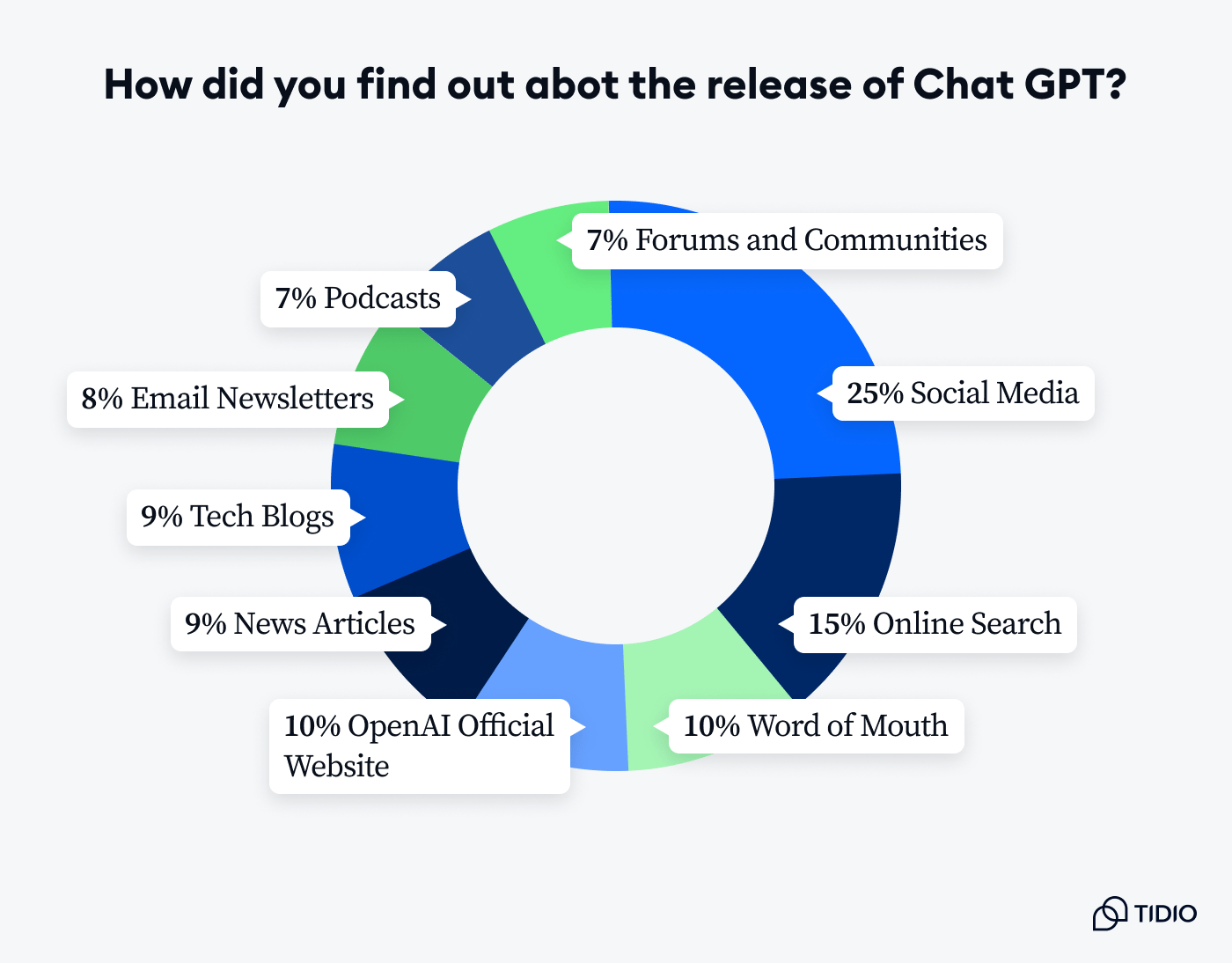

- Around 25% found out about the release of ChatGPT from social media, 15% from search engines, 10% from word-of-mouth, and another 10% from OpenAI’s official website

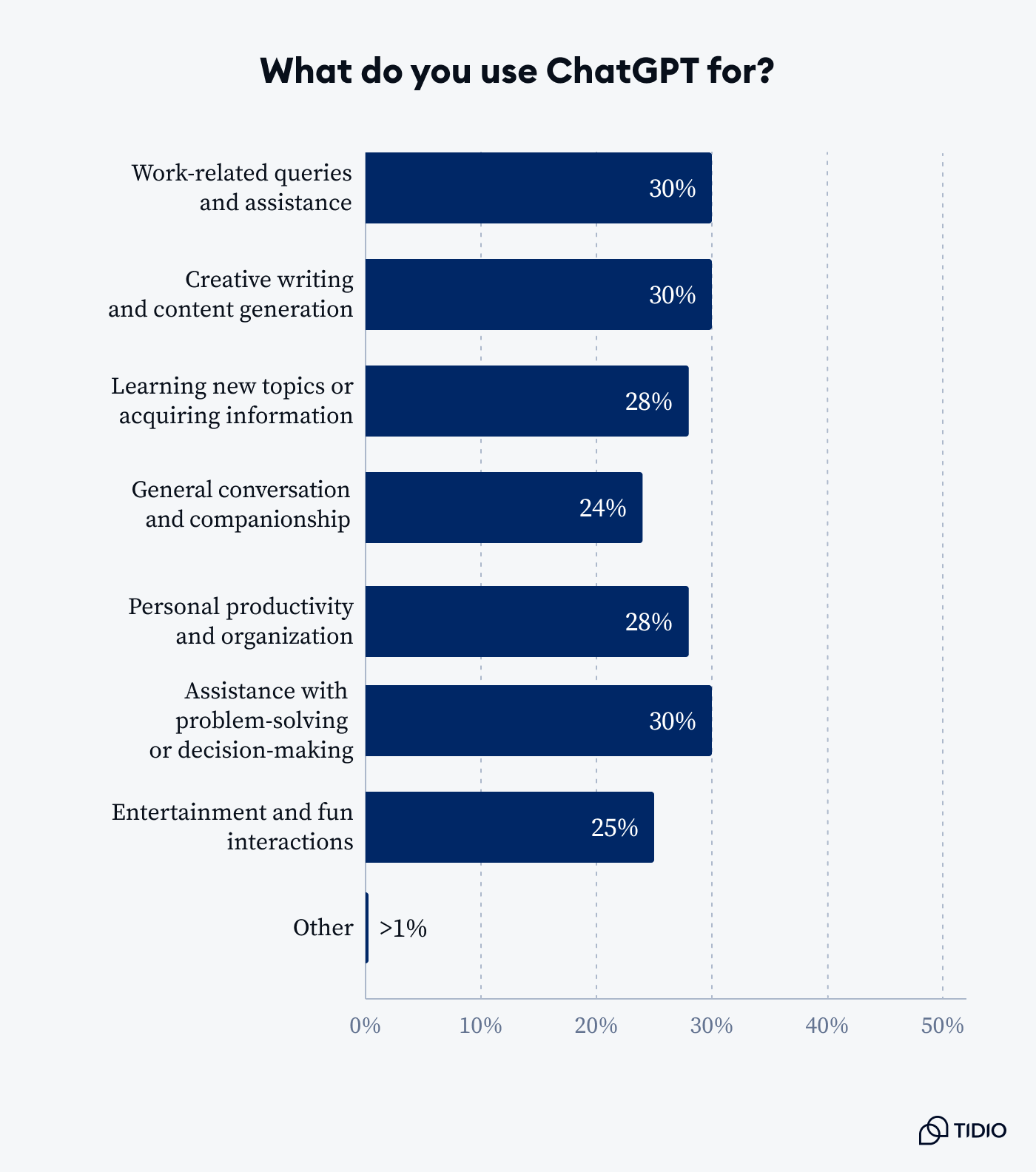

- Almost a third (30%) use ChatGPT to assist them with decision-making, and another 30% use it to solve work-related problems. As many as 23% just like to chat with AI

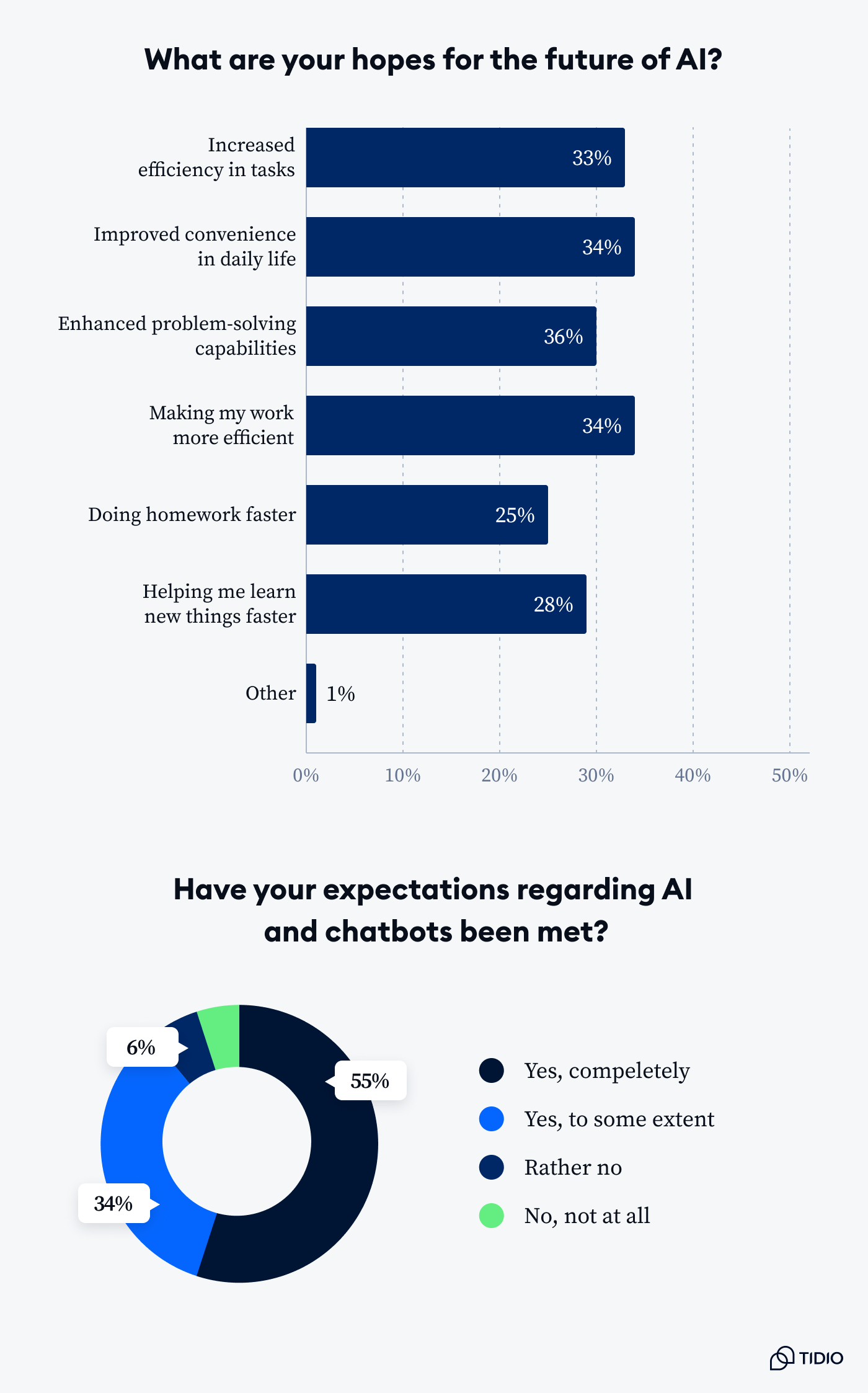

- Before trying generative AI, people expected enhanced convenience in daily life (34%), improved work efficiency (33%), better problem-solving (30%), and faster learning (29%)

- A whopping 89% of users state that their expectations from AI came true

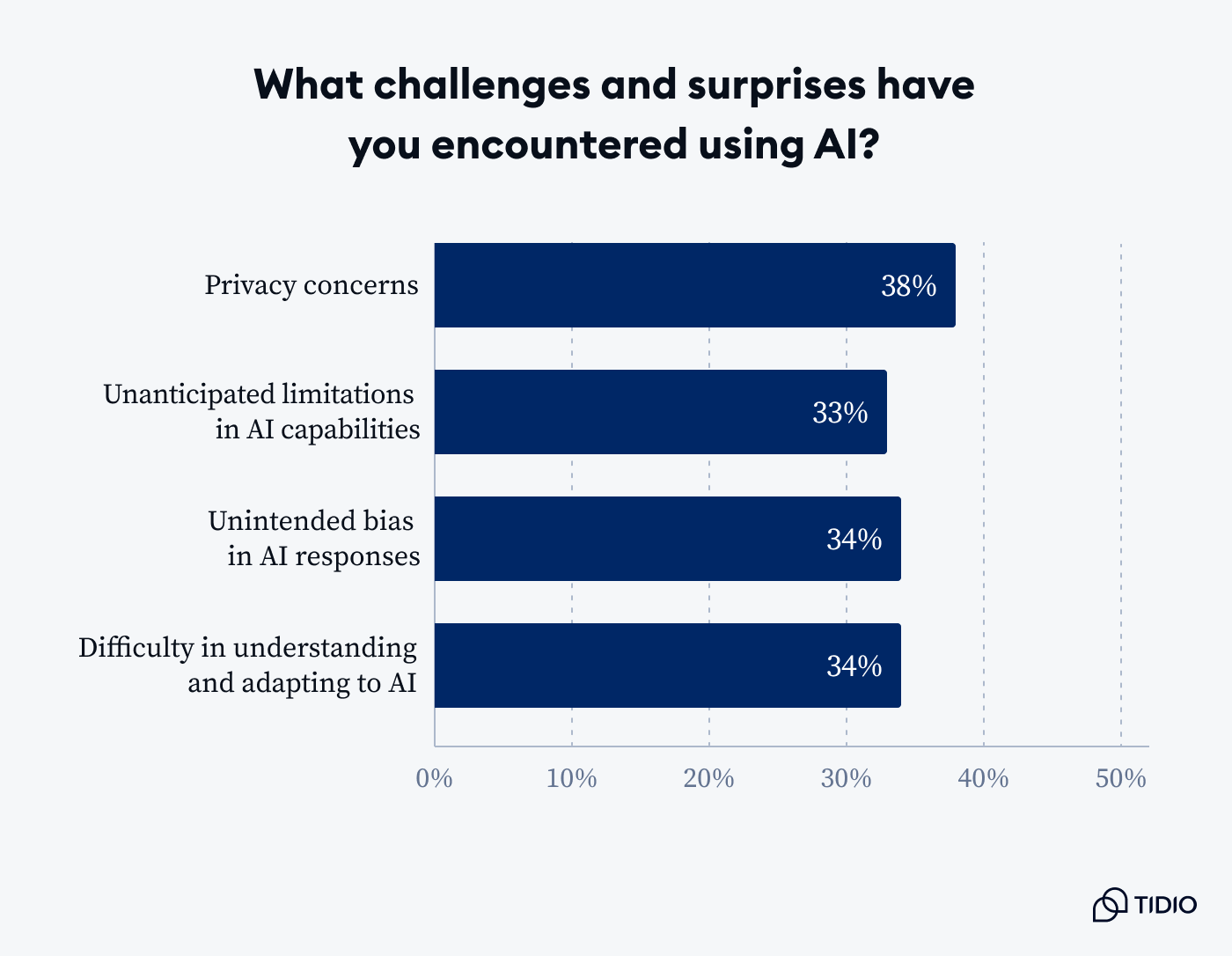

- About 38% note having privacy concerns when working with AI, 34% have had difficulties understanding or adapting to AI, and 33% have encountered biased responses

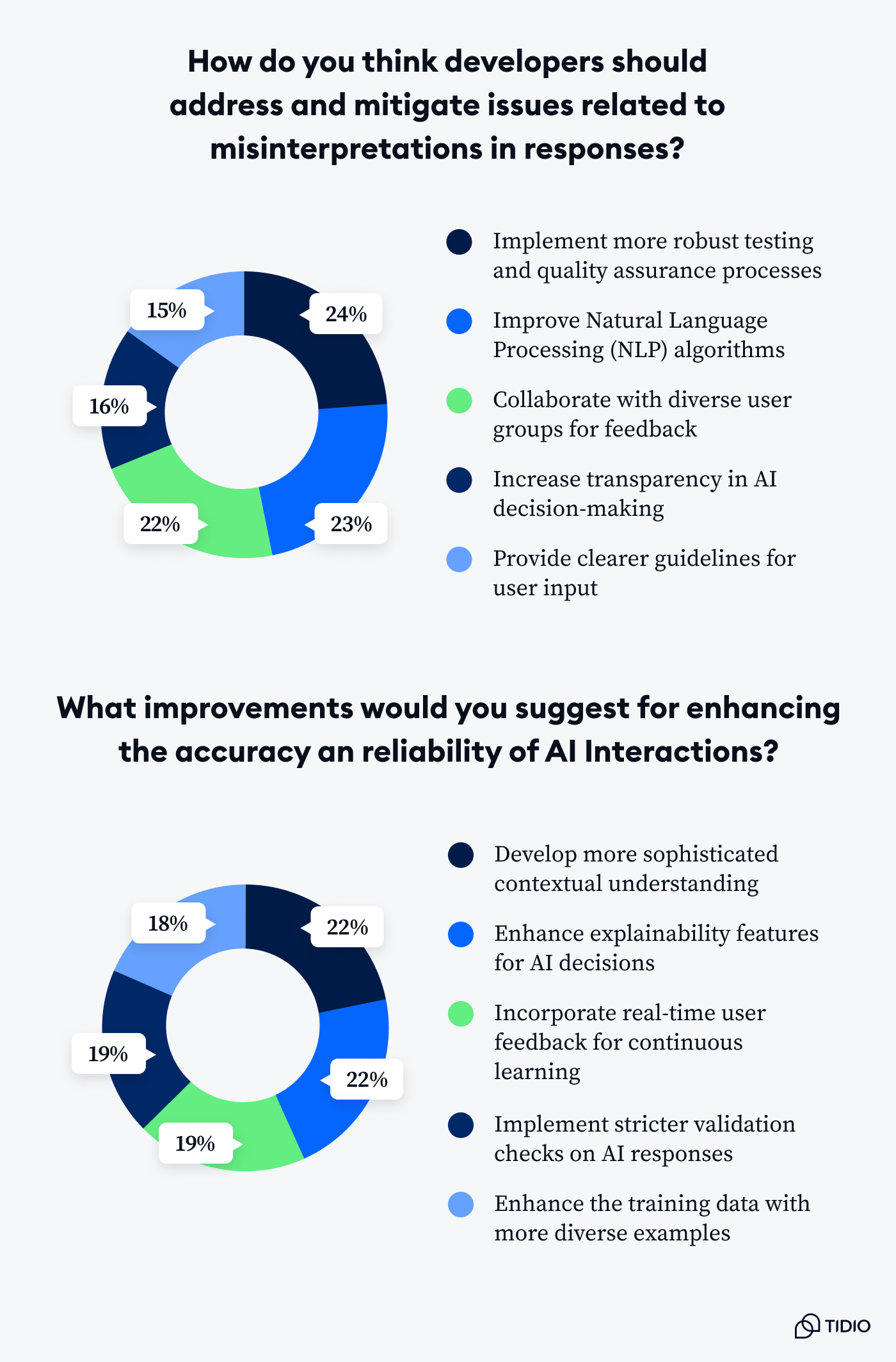

- About 24% believe that AI developers should implement more quality assurance processes for AI tools, while 23% want improved NLP algorithms. Around 22% want developers to collaborate with users for feedback, and 16% would like to see more transparency in AI decision-making

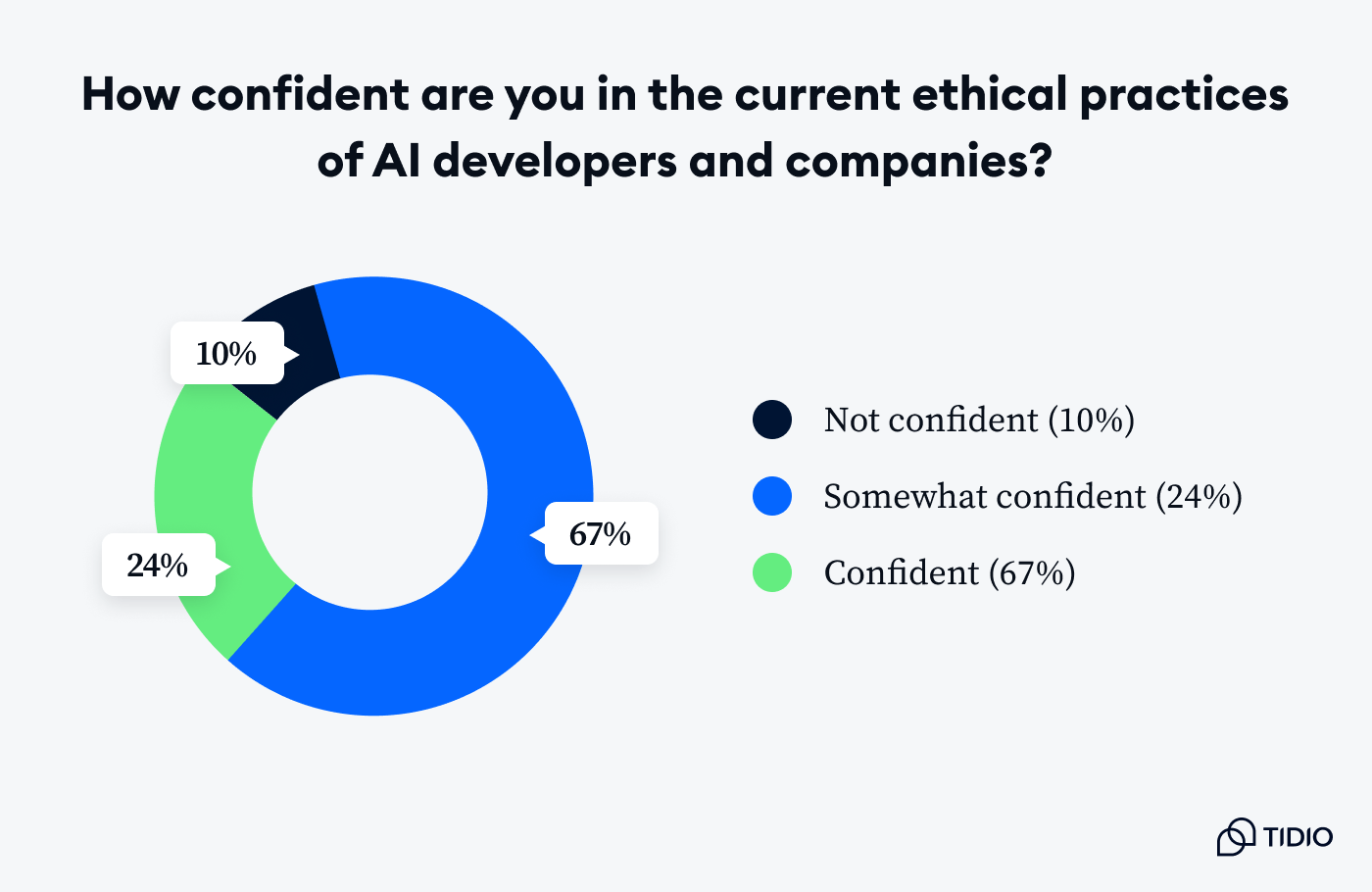

- Around 67% are confident in the ethical practices of AI development companies

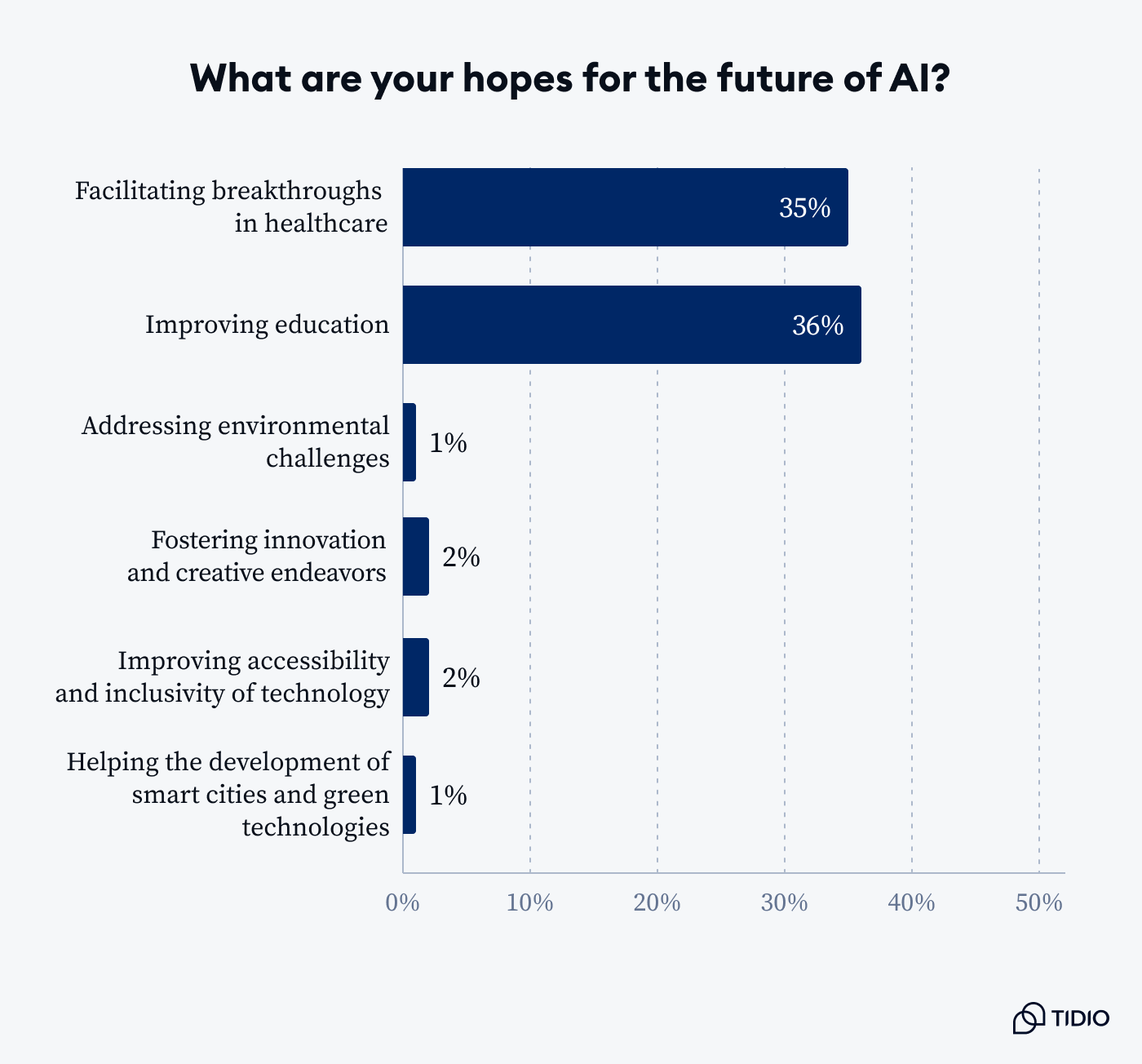

- As many as 35% hope AI will facilitate breakthroughs in medical research and healthcare, and 36% believe AI will enhance education and learning experiences. Only 1% think AI can help address environmental challenges

- A third of the respondents (32%) fear job displacement, while 33% are scared of the lack of international cooperation when it comes to AI. Another 31% are afraid of the potential misuse of AI for malicious purposes

- About 37% of people expect AI to change communication and interactions in their daily lives

As you can see, AI has become an integral part of our lives in a very short time. It’s everywhere, and whether we like it or not, we have to adapt.

Many people who were skeptical about ChatGPT are now active users, and some who underestimated the impact of AI may have already suffered the consequences (e.g., getting laid off because AI could replace them).

Let’s take a closer look at how we arrived at this point and see where we are standing in this AI revolution.

The year of OpenAI

For the average user, the AI journey started with ChatGPT, a language model initially powered by GPT-3.5 and designed to interact with anyone in a chat-like format. That’s how most of us found out about OpenAI, machine learning, and the faster ways of doing homework brought by AI (just kidding).

However, OpenAI’s early initiatives, such as the release of the OpenAI Gym toolkit and OpenAI Baselines in 2016-2017, already reflected a commitment to advancing the field of AI in the research community.

These early contributions laid the groundwork for subsequent breakthroughs, including powerful language models like GPT-2 and, eventually, ChatGPT. The creation of a “capped-profit” arm in 2019 further signaled OpenAI’s determination to secure the resources needed to push the boundaries of AI research and development.

And they did push them. However, the sheer success of ChatGPT was surprising even for the company itself. In fact, on ChatGPT’s first anniversary, OpenAI’s head of sales Aliisa Rosenthal tweeted this:

Even OpenAI’s leadership team didn’t grasp the full potential of what they were about to release, let alone ordinary people.

Early adoption

Average users mostly found out about the new tool from social media (25%), online search (15%), word-of-mouth (10%), or OpenAI’s official website (10%).

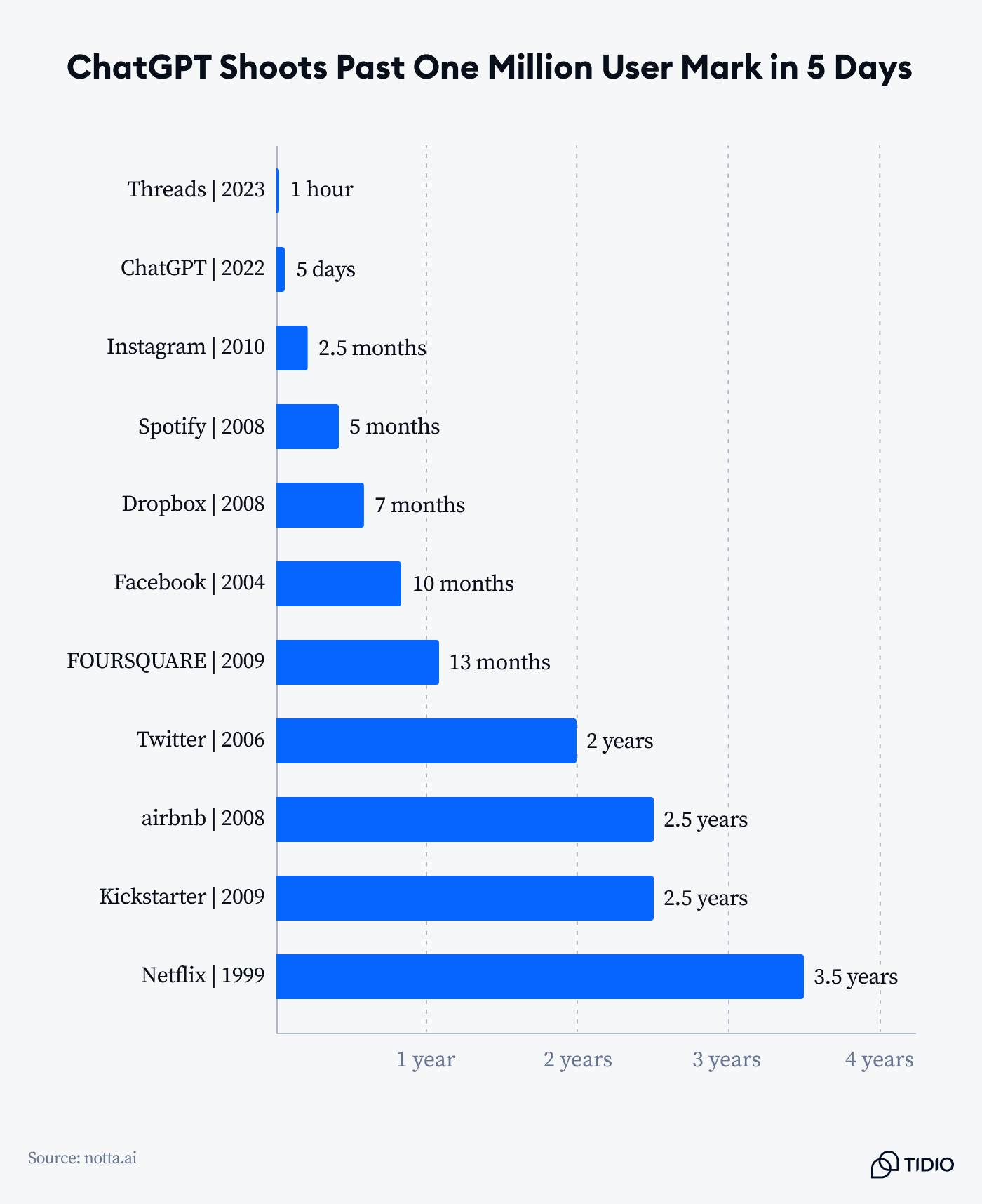

Almost immediately, social media posts, blog articles, and tweets proclaimed that “ChatGPT would own this year”. What seemed to some like too much buzz turned out to be true: after almost 70 years of AI taking baby steps, ChatGPT took the field to a new level. The new AI tool gained a whopping 1 million users in 5 days.

It might not even have been the most advanced milestone in global AI development. It was more about public perception: you just have to visit a website, register for free, and get access to the smartest know-it-all. It can answer highly specific questions, write you a college essay, and make your code work. Humans were, we dare say, flabbergasted.

Most users tested out the new “pocket PhD” tool right away. According to our respondents, 55% tried it immediately after it came out, and 34% gave ChatGPT some time. One year in, 79% of them regularly interact with ChatGPT and similar generative AI tools.

ChatGPT applications and usage

Currently, millions of people are regular users of ChatGPT. They use it for work, education, content creation, programming, recipe ideas, and much more. Here’s what our respondents shared regarding their use of AI:

Of course, the list is not exhaustive. For instance, some people shared that they use ChatGPT and other generative AI tools as “sex chatbots”. To each their own, we guess… People also shared simple, effective, and pretty harmless use cases. Here’s what one Reddit user said:

I use it for basic things. Like “make me a shopping list for a week, including chicken and beef dinners”. It's good for that. Idk why people use it to discuss political or philosophical ideas.

The bottom line is—

The lives of many people changed once ChatGPT came out. It might not feel this way, but if you use it, you likely accomplish certain things faster and more efficiently. When asked how their use of AI has changed over the past year, our respondents most often noted exploring new functionalities (37%) and using AI more frequently than before (33%).

Still, one fourth of respondents have indicated that their use of AI has declined. What is the reason for this?

Concerns and apprehensions

Even though 89% of people say that their expectations came true, AI still poses some challenges. Take a look at some surprises that our respondents have encountered:

Like anything else, AI has its limitations, and we can’t take everything it generates at face value. As many as 76% have reported encountering AI hallucinations at least once in their interactions with AI. AI hallucinations are cases in which a large language model (like GPT) generates false information and presents it as fact. Turns out, it’s quite a common occurrence.

Read more: Check out our research on AI hallucinations and how to better avoid them.

So—

There is room for improvement for everyone, and AI development companies are no exception. They can take plenty of steps to make safer and more reliable AI tools.

Our respondents had some ideas on how it can be done.

Predictions and hopes

Still, there is plenty of hope. About 67% of our respondents are confident in the ethical practices of AI development companies, including OpenAI, and believe that AI-powered tools will change our world for the better.

Not everything causes so much optimism, though.

When asked how they envision AI positively impacting the world and humanity, people share the belief that there will be AI-powered breakthroughs in medical research and educational advancements.

However, there is significantly less trust in AI’s ability to address environmental challenges, improve accessibility, or even foster innovation. This might be connected to the fact that many people consider ChatGPT a “killer of creativity”. After all, it can write decent poetry in seconds, so some might wonder, “Why do we even need artists?”

While AI is not omnipotent, it can still do a lot more than we can even imagine. True, it lacks essential human skills like empathy, critical thinking, or emotional intelligence, but we can’t argue with the fact that it’s much faster and more efficient than us humans.

Now—

Let’s take a look at how ChatGPT changed industries. Is it for the better or for the worse?

AI application and challenges across industries

ChatGPT has become widely used across the world in practically every industry. From marketing and non-profit research to fashion and retail, it’s a big helper to millions of people. Let’s take a closer look at how some key industries have been affected.

1. Tech

No doubt, ChatGPT has changed the tech world. Adding to the already shaky situation with global layoffs, the know-it-all tool seemed to some like a tempting replacement for costly human talent.

But there’s more to the story.

Microsoft sensed something big was coming back in 2019 and invested $1 billion in OpenAI. It wasn’t disappointed: with early access to OpenAI’s advances, Microsoft quickly integrated OpenAI’s LLM into its Bing search engine.

This started a true arms race in the tech world, and suddenly, everything became about AI. It pushed giants like Google to rush out their own AI bots, made room for multiple new AI startups, and brought attention to OpenAI’s biggest competitors, such as Anthropic. Very eventful, isn’t it?

ChatGPT set a record as the fastest-growing consumer app at the time. People flocked to try the new tool in such numbers that it often turned users away at capacity to avoid overloading. This prompted OpenAI to release a paid version, ChatGPT Plus, at $20 per month, which, among other things, gave subscribers access even during peak times.

One year into the AI craze, ChatGPT has prompted practically everyone in the tech space to contemplate investing in AI technology. It has brought generative AI to the forefront of the public’s minds and convinced everyone that this is the future. Tech will never be the same, and we are yet to see how the story unfolds.

2. Healthcare

Apart from saving students from writing their essays themselves, ChatGPT and generative AI in general gave people hope for a positive change in spheres that truly matter. One of them is healthcare and medicine. Has AI brought about any significant change here?

It should be noted that ChatGPT is designed to avoid giving personalized medical advice, which is undoubtedly a good thing.

However, this doesn’t mean the tool knows nothing about the topic. In one study, ChatGPT performed at or near the passing threshold on all three parts of the US Medical Licensing Exam. What’s impressive is that it did so without any specialized medical training.

Healthcare practitioners quickly explored ChatGPT’s potential to ease their workloads, conducting studies on its effectiveness in generating discharge summaries or radiology reports. Additionally, the pharmaceutical industry is using generative AI models to boost drug discovery efficiency, while tech giants are investigating their application for more accurate cancer targeting and other purposes.

Still, ChatGPT’s successes shouldn’t inspire unwarranted optimism. Concerns have arisen over the potential for AI chatbots, including ChatGPT, to generate medical misinformation, perpetuate biases, and hinder medical education. In essence, autonomous diagnosis of patients by machines is not something to expect in the near future. AI models like ChatGPT sometimes state false information with complete confidence, which poses real risks in the medical field.

3. Education

Students were probably the happiest demographic when ChatGPT came out. Homework could be solved in seconds: from mathematical equations to essays to literature analysis to languages… The list goes on. Their teachers and professors were definitely less happy and started their own “war on AI”.

There were concerns that generative AI could lead to widespread cheating and plagiarism. Universities discussed revising plagiarism policies, and some US school districts outright banned ChatGPT. Nearly a year into the generative AI trend, educators’ initial concerns have given way to pragmatism. Many students now recognize the technology’s tendency to generate false information, and teachers are prioritizing assignments that foster critical thinking, using AI to spark new classroom discussions.

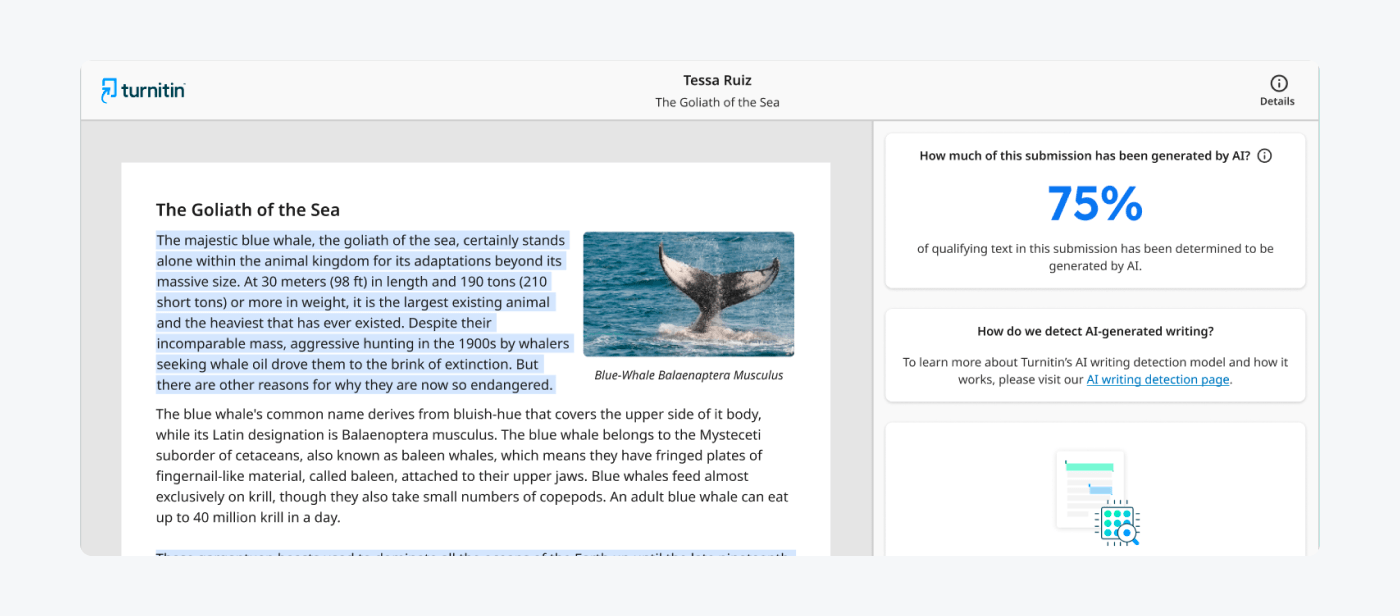

Still, efforts to catch cheaters, whether they use generative AI or other means, are ongoing. Turnitin, a widely used plagiarism checker, has introduced an AI detection tool designed to pinpoint sections of a written piece that may have been generated by AI. Between April and July 2023, Turnitin assessed over 65 million submissions and found that 10.3 percent of them contained AI-generated writing.

Approximately 3.3 percent of submissions were flagged as being about 80 percent AI-generated. However, these systems are not infallible, as Turnitin acknowledges a 4 percent false positive rate.

Due to the possibility of false positives, Turnitin suggests that educators engage in conversations with students rather than automatically failing them or accusing them of cheating. It makes sense, since the constraints of Turnitin’s tool in identifying AI-generated content mirror the limitations of generative AI itself.

Whether we like it or not, generative AI has changed education forever. Both teachers and students have to learn to live and work with it. Instead of banning AI tools and equating the use of ChatGPT with cheating, teachers might consider educating students on how to use the tool safely for their study and work. After all, it is here to stay, so we might as well befriend it.

4. Customer service

Customer service has become one of the most affected spheres when it comes to AI. Not in a bad way at all—AI has made it smoother and more efficient.

Dozens of companies immediately rushed to install chatbots powered by LLMs. They saw the potential and seized the chance, starting yet another AI arms race. This one, however, was probably worth it.

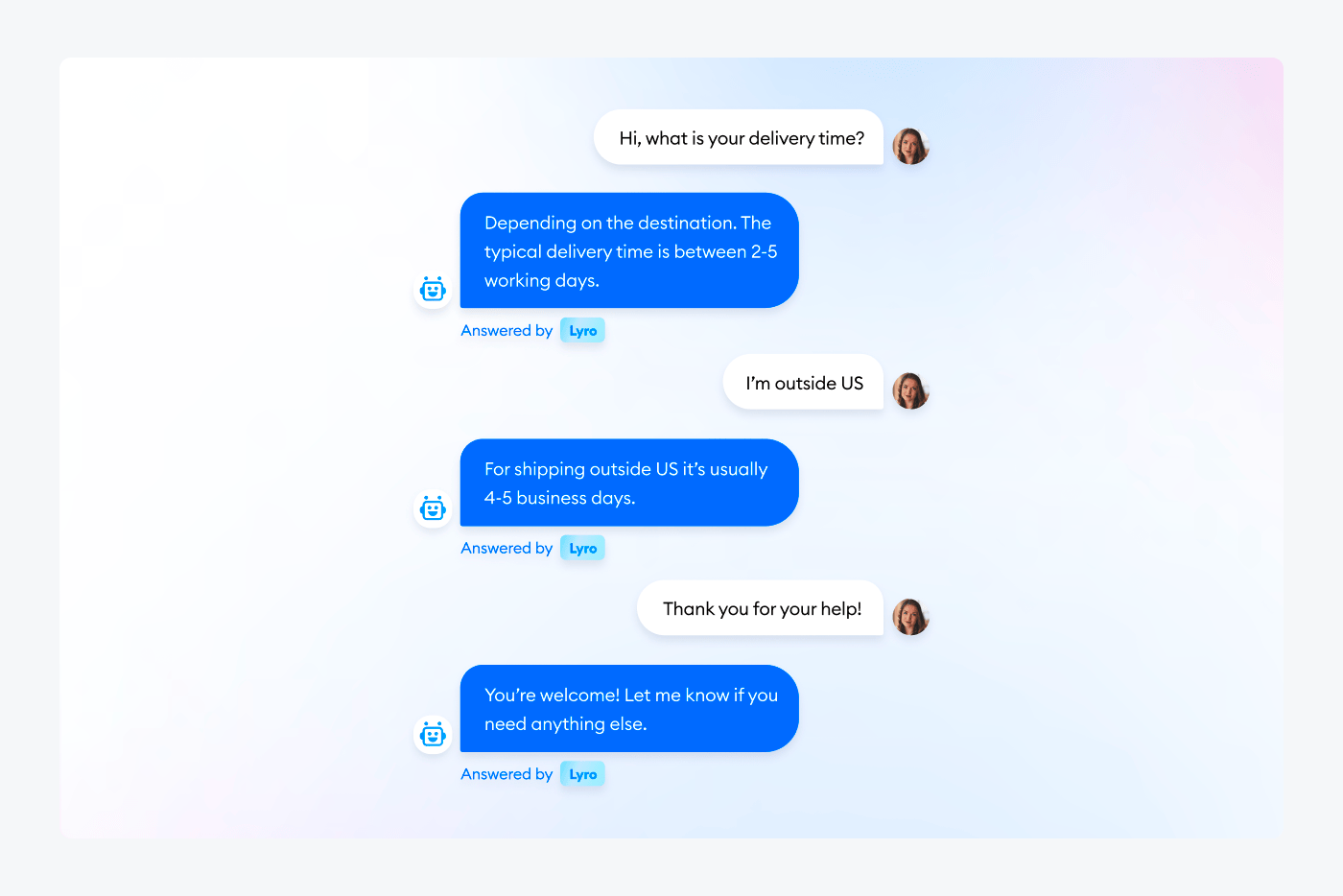

AI can make support processes more efficient, lets businesses serve customers round the clock and in multiple languages, helps analyze vast amounts of data, and can drastically cut support costs. It’s fair to say that AI bots can deliver a stellar customer experience at a scale no human team could match. Tools like Lyro by Tidio (powered by Anthropic) can handle up to 70% of customer requests. No matter the size of a company, it’s a huge relief.

Read more: Check out our guide on AI in customer service and see how you can set up Lyro for your business.

However—

Generative AI has brought on a lot of changes. Did we expect them? Did we rejoice or fear them? How do we feel now, one year into the “AI race”?

AI adoption: fears and expectations

About 89% of people say their expectations from AI came true. They expected enhanced convenience in daily life (34%), improved work efficiency (33%), better problem solving (30%), faster learning (29%), help with homework (25%), and other improvements in their daily life. Some people didn’t expect much (and that came true for them, too).

Here’s what one Reddit user shared on their expectations vs. reality:

My expectations pretty much came true—it will be years at least before any AI product significantly impacts my life.

ChatGPT (and AI) is incredible as a technology, but its function so far is to be almost-as-good as things that already exist. I cannot reasonably see it noticeably raising the bar in any industry in the near future, except in how it makes already-achievable tasks less effort.

This is especially true for products like ChatGPT, Bard, etc, where guardrails and data privacy concerns strangle both the potential for output, and the appetite for input.

AI will be insane. I think anyone can see that. But not until open source catches up, and the development of AI becomes affordable and understood outside of major private organizations.

While many things are made easy by generative AI, there is also a bitter pill to swallow: privacy concerns, AI hallucinations, job losses, and other negative consequences of the AI revolution.

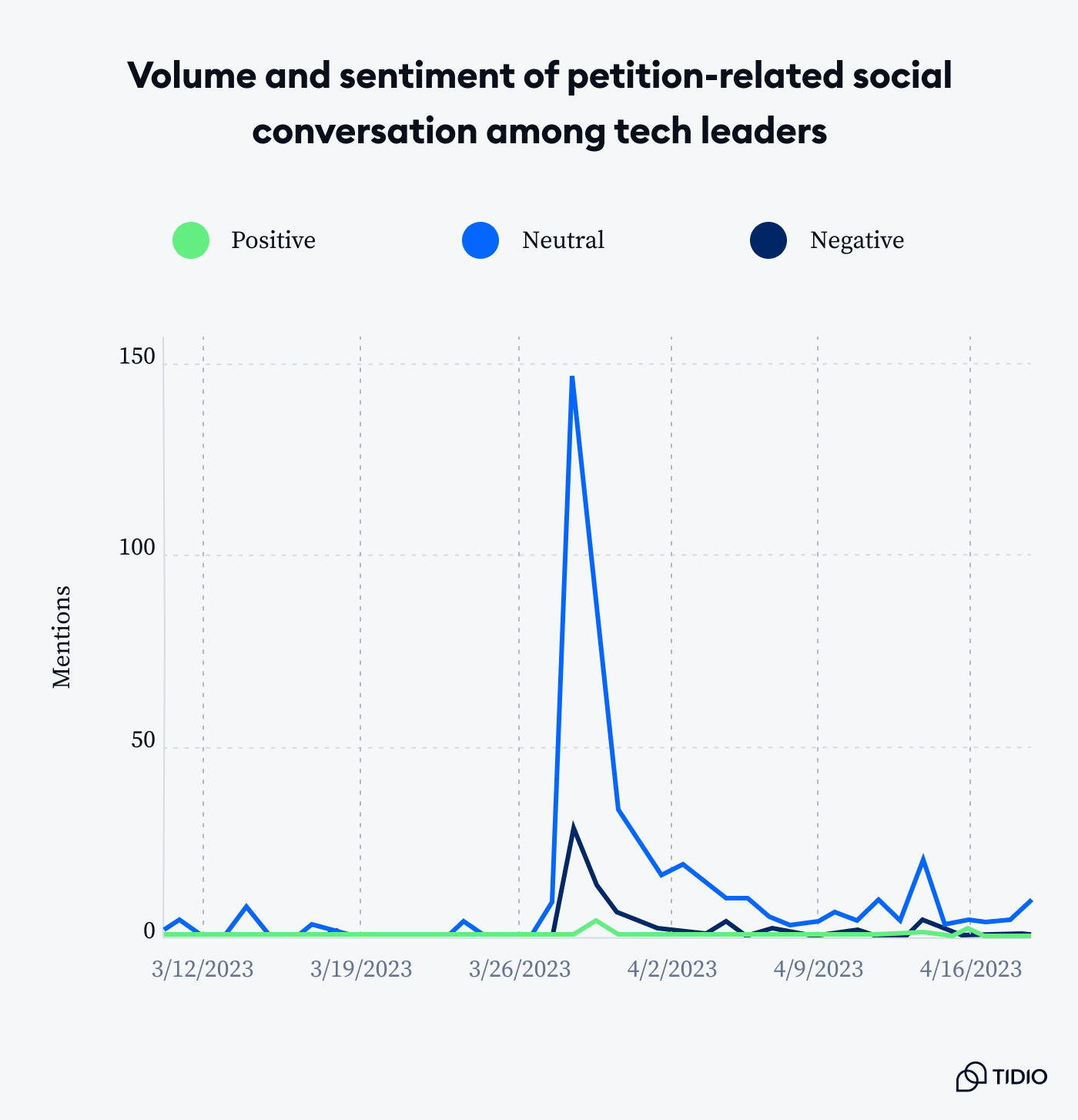

These concerns have, at times, been reinforced by AI leaders themselves. In spring 2023, hundreds of AI executives, researchers, and public figures sounded the alarm about the threats of artificial intelligence.

In March 2023, an open letter published by the Future of Life Institute was signed by numerous tech leaders, including billionaire entrepreneur Elon Musk and Apple co-founder Steve Wozniak. It called for a six-month pause in training AI systems more powerful than GPT-4 and for a substantial increase in government oversight. In May 2023, OpenAI CEO Sam Altman warned US lawmakers that “If this technology goes wrong, it can go quite wrong.”

The letter emphasized, “AI systems with human-competitive intelligence can pose profound risks to society and humanity.”

Shortly afterward, Altman joined hundreds of AI leaders in signing the Statement on AI Risk. It was brief but powerful, containing just 22 words: “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

If people weren’t scared already, they definitely became so. While tech leaders’ general sentiment regarding these warnings remained mostly neutral, the general public seemed to be more alarmed.

One year after ChatGPT’s release, the fear has mostly died down. However, the words and signatures in the statement remain a reminder that we cannot let the technology get out of control.

But what about the governments around the world? They seem much more suspicious when it comes to AI. Let’s take a closer look.

Laws and regulations: trying to tame AI

There are quite a few legal implications surrounding ChatGPT. They include data privacy, potential copyright infringement, bias and inaccuracy prevention, and more. Depending on local laws, countries are more or less concerned about ChatGPT and its usage. Let’s take a look at some of the issues and how they are being addressed.

Data privacy

OpenAI may use ChatGPT conversations to train future models, which could raise legal concerns if users enter confidential information that the tool later reproduces.

To address this, users can manually disable chat history and ask OpenAI to delete past conversations, reducing the risk of sensitive information being retained. OpenAI emphasizes compliance with privacy regulations such as the California Consumer Privacy Act (CCPA) and the EU’s General Data Protection Regulation (GDPR), which protect citizens’ privacy rights.

However, undisclosed use of such tools by businesses could lead to legal repercussions, for example under California’s Bot Disclosure Law. This law requires businesses to disclose when they use a bot to communicate with consumers in order to encourage a purchase or influence a vote, underscoring the importance of transparency.

Copyright infringement

ChatGPT’s training involves extensive data from internet sources, possibly infringing on third-party intellectual property rights. EU regulators are developing legislation for transparency in AI algorithm training sources, though resolution remains uncertain. In the US, the Federal Trade Commission has used “algorithmic disgorgement”, compelling companies to delete algorithms trained on improperly sourced data.

According to OpenAI’s terms, users can reproduce ChatGPT outputs for any purpose, but issues arise as these outputs may not always be unique, potentially leading to legal complications for commercial use. OpenAI’s policy doesn’t explicitly address this. The responsibility for content lies with users, implying potential liability if copyrighted material is reproduced, although verifying this is challenging due to the tool’s inability to provide accurate citations.

Biases and misinformation

ChatGPT undergoes training on extensive datasets that might have concealed biases or limitations. The tool itself lacks the capability to comprehend the ramifications of its outputs. Despite OpenAI’s endeavors to fix these issues, ChatGPT isn’t consistently reliable and may occasionally produce responses containing discriminatory or inaccurate information.

For instance, in April 2023, an Australian mayor threatened to sue OpenAI for defamation after ChatGPT falsely claimed he had served time in prison for bribery, when in fact he was the whistleblower who had exposed the scandal.

It’s crucial to validate the accuracy of AI-generated responses against reliable sources and to critically assess the risk of bias on any given topic.

Read more: Check out our article on AI biases full of examples from ChatGPT and other tools.

There’s so much happening in the AI space every day. What does the future hold for us? Where are we going when it comes to AI?

A recap of the year in prompts: reflections and takeaways

The future of ChatGPT and AI looks promising. While the current version of ChatGPT still has limitations, ongoing advances in natural language processing suggest that future LLMs will likely become significantly more sophisticated and capable.

ChatGPT has the capacity to revolutionize diverse industries, including customer service, education, medicine, personal productivity, and content creation. Across these domains, the natural language generation and understanding capabilities of ChatGPT hold the potential to enhance efficiency, accuracy, and overall outcomes.

However, as ChatGPT becomes more pervasive, ethical considerations come into play. The increasing power of this technology necessitates a careful examination of its usage. It is crucial to evaluate whether ChatGPT is being employed in ways that are fair, responsible, and ethical as the technology continues to evolve.

With great power comes great responsibility. We should never forget that when using ChatGPT or any other AI tool. With this thought in mind, it’s a good idea to kick back, relax, and responsibly enjoy all the benefits AI has brought us.

Sources

- The Year of ChatGPT and Living Generatively

- On ChatGPT’s one-year anniversary, it has more than 1.7 billion users—here’s what it may do next

- Performance of ChatGPT on USMLE: Potential for AI-Assisted Medical Education Using Large Language Models

- The Future of ChatGPT: Predictions and Opportunities

- A year of ChatGPT: 5 ways the AI marvel has changed the world

- ChatGPT one year on: who is using it, how and why?

- AI Biases: Are We Ready to Be Stereotyped by Robots?

- When Machines Dream: A Dive in AI Hallucinations [Study]

- ChatGPT (We need to talk)

- ChatGPT is going to change education, not destroy it

- Don’t Ban ChatGPT in Schools. Teach With It.

- AI Customer Service: All You Need to Know [+ Examples]

- OpenAI’s turbulent weekend

- Statement on AI Risk

- AI leaders warn the technology poses ‘risk of extinction’ like pandemics and nuclear war

- What is the history of artificial intelligence (AI)?

- Europe takes aim at ChatGPT with what might soon be the West’s first A.I. law. Here’s what it means

- What Are the Legal Implications of ChatGPT?

Methodology

For this study about a year of AI usage, we collected responses from 896 people via Reddit and Amazon Mechanical Turk.

Our respondents were 75% male, 24% female, and ~1% non-binary. Most of the participants (76%) are Millennials aged between 25 and 41. Other age groups represented are Gen X (15%), Gen Z (6%), and Baby Boomers (2%).

Respondents had to answer 25 questions, most of which were multiple-choice or scale-based. The survey included an attention check question.

Fair use statement

Did our research contribute to your understanding of AI? Feel free to share the findings from this study, ensuring you acknowledge the source and provide a link to this page. Thank you!